Imports

It is possible to import data to your project from sources outside of Bloomreach Engagement. These can be CSV or XML files (uploaded through the import wizard or through a URL feed), integrated SQL databases, imports from file storage integration (Google Cloud Storage, SFTP, Amazon S3 and Azure Storage), or data from your analyses in Bloomreach Engagement.

Bloomreach Engagement works with 4 types of data: customers, events, catalogs, and vouchers.


Before you start, check the technical reference section of this guide and best practices to understand the requirements and limitations.

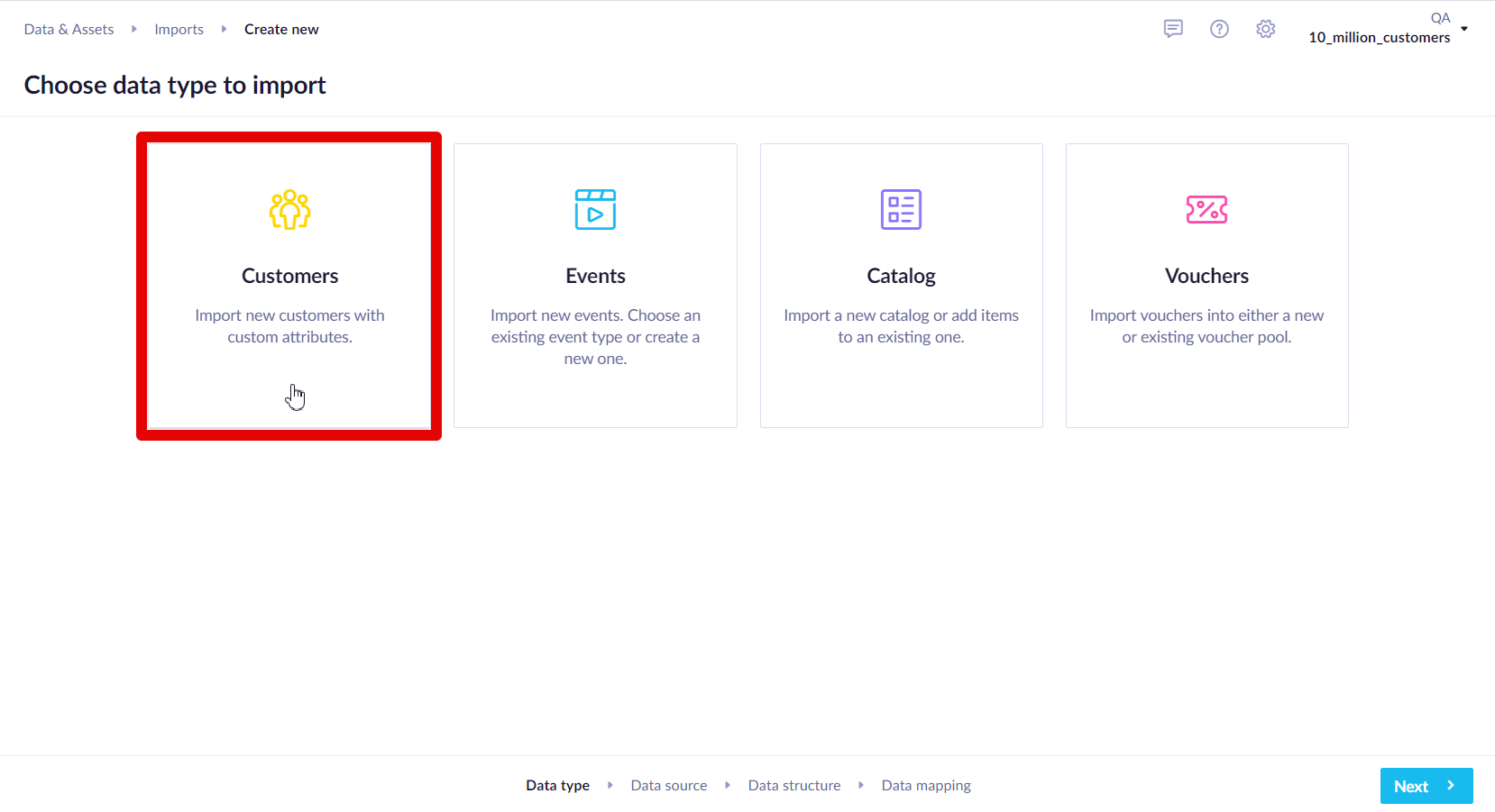

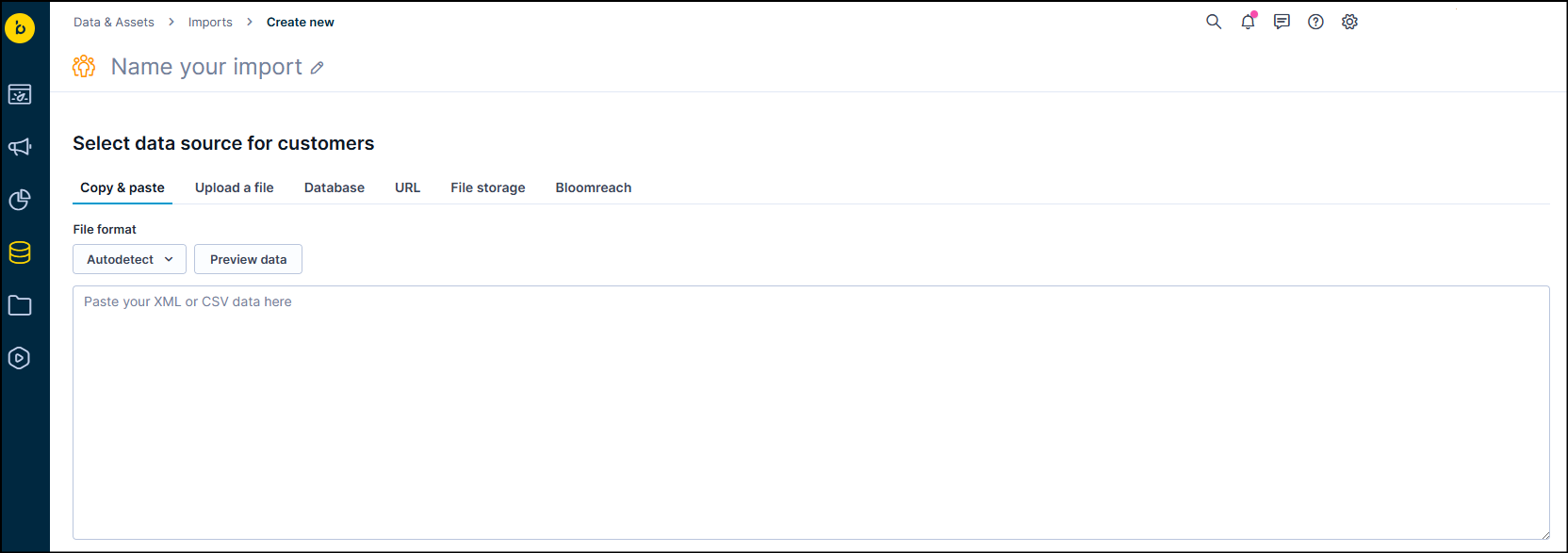

To import new data, go to Data & Assets > Imports > + Create new.

Next, you will be asked to choose which type of data you want to import. This article will now explain the basics that you need to know about imports while guiding you through the import process for each type of data.

Importing customers

In customer imports, columns represent the customer attributes, while each row stands for a single customer. The first row in the data is used to name the attributes. You can change the naming, discard the whole column, or add a new column with a static value later in the data mapping step.

In the row below, you can see the four steps that you will have to go through when making data imports.

Data source

In this step, you will have to choose your data source. You can import your data from the following sources:

| Data source | Description |
| --- | --- |
| Copy & paste | You can directly paste your data into the application. (MAX size = 500,000 bytes (500KB)) |
| Upload a file | The file must be in CSV or XML format. You can select a File format or drag and drop the file onto the canvas. (MAX size = 1GB) |
| Database | You can feed the data from one of your integrated databases using SELECT statements. The most commonly used databases are supported. |
| URL | If your data is saved online, you can create a feed to its URL, for example: https://www.hostname.com/exports/file.csv. If required, you can also enter a username and password for HTTP Basic Authentication in the form. The password is securely stored in Bloomreach Engagement and is not visible to other users. When feeding a CSV or XML file through a URL, Bloomreach Engagement sends the X-Exponea-Import-Phase header with one of the following values: "sample" for the encoding detection sample request; "preview" for the preview shown when a user clicks "Preview data" in the Bloomreach Engagement import wizard; "download" for the download of the full file. |
| File storage | Import files from your own file storage, such as an SFTP server or a Google Cloud Storage bucket. Once you click on this option, click on Select a file storage integration and choose your SFTP, GCS (Google Cloud Storage for Imports and Exports), Amazon S3 or Azure integration from the list. If you don't have any, click on the + button that will take you to set up a new SFTP or GCS integration. |
| Bloomreach Engagement | You can also import data from analyses that you created in Bloomreach Engagement in the current or a different project. |
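If you host the URL feed yourself, your endpoint can branch on the X-Exponea-Import-Phase header described for the URL source. The following is only a minimal sketch under stated assumptions: the `body_for_phase` helper, the `FeedHandler` class, the sample data, and the choice to return just the first five lines for the "sample" and "preview" phases are all hypothetical. The only thing taken from this article is the header name and its three values.

```python
import http.server

# Hypothetical feed content; a real endpoint would read it from storage.
FULL_CSV = "registered;first_name\r\n" + "".join(
    f"user{i};Name{i}\r\n" for i in range(100)
)


def body_for_phase(phase: str, full_csv: str, head_lines: int = 5) -> str:
    """Return the payload for one X-Exponea-Import-Phase value.

    Assumption: a short head of the file is enough for "sample" and
    "preview"; only "download" needs the complete file.
    """
    if phase in ("sample", "preview"):
        lines = full_csv.splitlines(keepends=True)
        return "".join(lines[:head_lines])
    return full_csv


class FeedHandler(http.server.BaseHTTPRequestHandler):
    """Serves the CSV and adapts the body to the requested import phase."""

    def do_GET(self):
        # Treat a missing header as a full download (assumption).
        phase = self.headers.get("X-Exponea-Import-Phase", "download")
        body = body_for_phase(phase, FULL_CSV).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/csv; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, you could pass `FeedHandler` to `http.server.HTTPServer`. A production feed would additionally need HTTPS and, if you use it, HTTP Basic Authentication.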

Important

After selecting your data source, always click on "Preview data" to see if the format looks as expected. If there is an issue, check that the correct delimiter and encoding are selected.

Failed import

Note that the notification about a failed import will be sent to the user who last saved the scheduled import and to everybody who has the permission portal-app.imports.update (read more in the Notifications for imports section). This means that the import-failed status might not be sent to the owner of the import.

Imports from file storage

Imports from file storage support all functional requirements for importing data for any use case. Importing data from files in different storage is one of the most secure and convenient ways to transfer data to Bloomreach Engagement automatically. This allows:

- Imports to filter files that should be imported

- Listen to new file uploads

- Ability to not import the same file twice

Further functions:

-

Remembers the state of the folder

-

Bloomreach Engagement remembers all files already uploaded per active import. When you delete a whole import, all remembered files are also wiped.

-

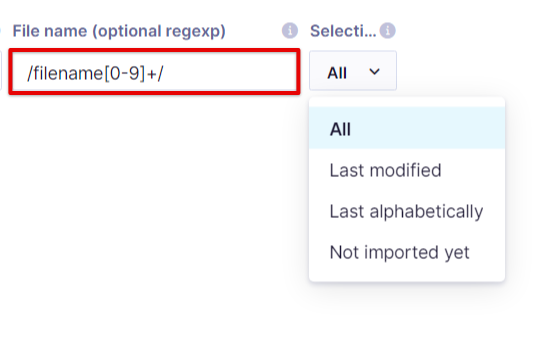

General regex match for any file storage to filter files that should be imported. The enclosing slashes ( / ) are mandatory to identify a regular expression! Example: /filename[0-9]+/

-

General "new file" trigger for any file storage (Bloomreach Engagement checks file storage for new files every 5 minutes and imports those that were not imported yet)
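Before you save a file filter, it can help to check locally which file names it would select. The sketch below uses Python's `re` module and assumes that the expression may match anywhere in the file name. This article does not specify the regex dialect or matching mode that Bloomreach Engagement uses, so treat the result as an approximation. The helper names and the file names are made up for illustration.

```python
import re


def parse_pattern(file_filter: str) -> re.Pattern:
    """Strip the mandatory enclosing slashes and compile the expression."""
    enclosed = file_filter.startswith("/") and file_filter.endswith("/")
    if len(file_filter) < 2 or not enclosed:
        raise ValueError("a regular expression must be enclosed in slashes")
    return re.compile(file_filter[1:-1])


def matching_files(file_filter: str, names: list[str]) -> list[str]:
    """Return the names that the filter would select."""
    pattern = parse_pattern(file_filter)
    # Assumption: the pattern may match anywhere in the name (re.search).
    return [name for name in names if pattern.search(name)]


names = ["filename1.csv", "filename20240101.csv", "filename.csv", "other.csv"]
print(matching_files("/filename[0-9]+/", names))
# ['filename1.csv', 'filename20240101.csv']
```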

If you need to modify the schema of an existing import (for example, updating the regex pattern or changing the data structure), we strongly recommend making these changes only after all currently scheduled files have been processed. Changing the schema mid-way can cause in-progress or pending import tasks to be cancelled or removed by the system's cleanup job.

File storage limitations

- Scheduling file imports with selection rule All and execution rule New file uploaded is not allowed.

- Scheduling file imports with the selection rule All or Not imported yet has a hard limit of 200 files. Imports attempting to schedule over 200 files will not be allowed to be saved and started. Already imported files also count towards this limit, so we recommend regular cleaning of the files in the file storage to avoid hitting the limit.

Example

Automatic imports - you have a DWH that exports data to file storage on a daily basis (for example, purchases). The exported file name will be different every day (regex match) and can be finished at a different time due to the complexity of the export (new file trigger).

Selection rule

Once you fill in the pre-mapping step for file storage integrations (Amazon S3, SFTP & Google Storage), you can choose the Selection rule.

It applies when more than one file in the storage matches the file search:

- All - all matched files will be imported (if already imported files are not removed, it will cause repeated imports)

- Last modified - only 1 last modified file will be imported even when there are multiple files matching the filter and previously imported files will be skipped

- Last alphabetically - only 1 last file, when sorted alphabetically, will be imported even when there are multiple files matching the filter

- Not imported yet - all newly discovered files will be imported, Bloomreach Engagement remembers all files already uploaded per active import. When you delete a whole import, all remembered files are also wiped

The selection rule isn't applied when using the Preview function during the import configuration.

Important

When selecting Bloomreach Engagement as a data source, be aware that analyses' time filter uses the timezone defined in user settings, while import uses a time filter in UTC. This can create discrepancies between the data shown in analysis and the data imported.
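To see why the two time filters can disagree, consider an event tracked shortly after midnight in the user's timezone. The sketch below assumes a hypothetical user timezone of UTC+02:00 and shows that the same event falls on different calendar days depending on which timezone the filter uses.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical user setting: UTC+02:00.
USER_TZ = timezone(timedelta(hours=2))

# An event tracked at 00:30 local time on 5 August 2020.
event_local = datetime(2020, 8, 5, 0, 30, tzinfo=USER_TZ)
event_utc = event_local.astimezone(timezone.utc)

print(event_local.date())  # 2020-08-05 (day seen by the analysis)
print(event_utc.date())    # 2020-08-04 (day seen by the import)
```

A filter such as "5 August" would therefore include this event in the analysis but not in the import.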

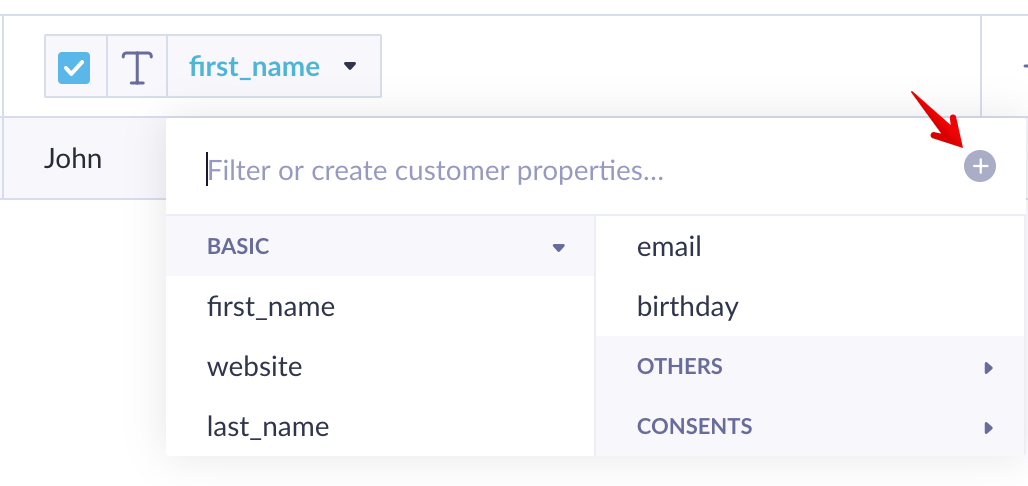

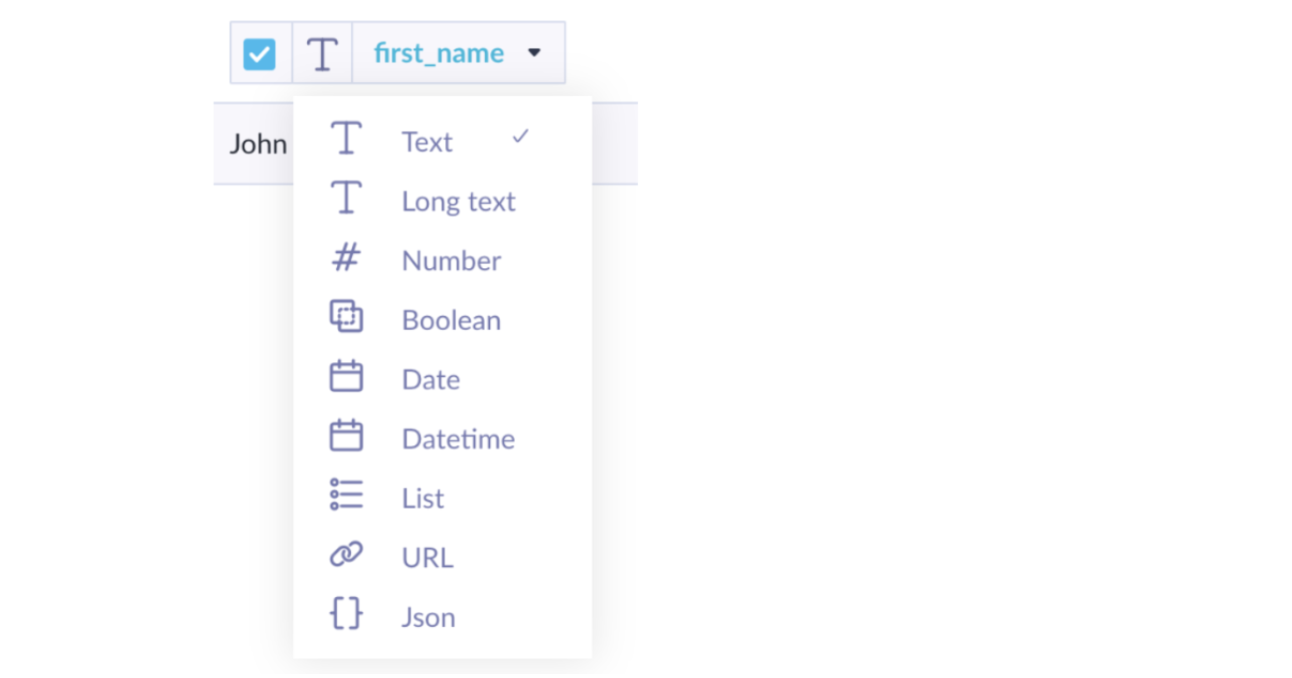

Data mapping

In the dropdown menu for each column name, you can map that column to an already existing attribute. When a column is mapped to an existing attribute, its name turns blue. Be sure that your imported data has the same structure and formatting as the existing data. You can also create a new attribute altogether. Just type the name and then click on the + sign.

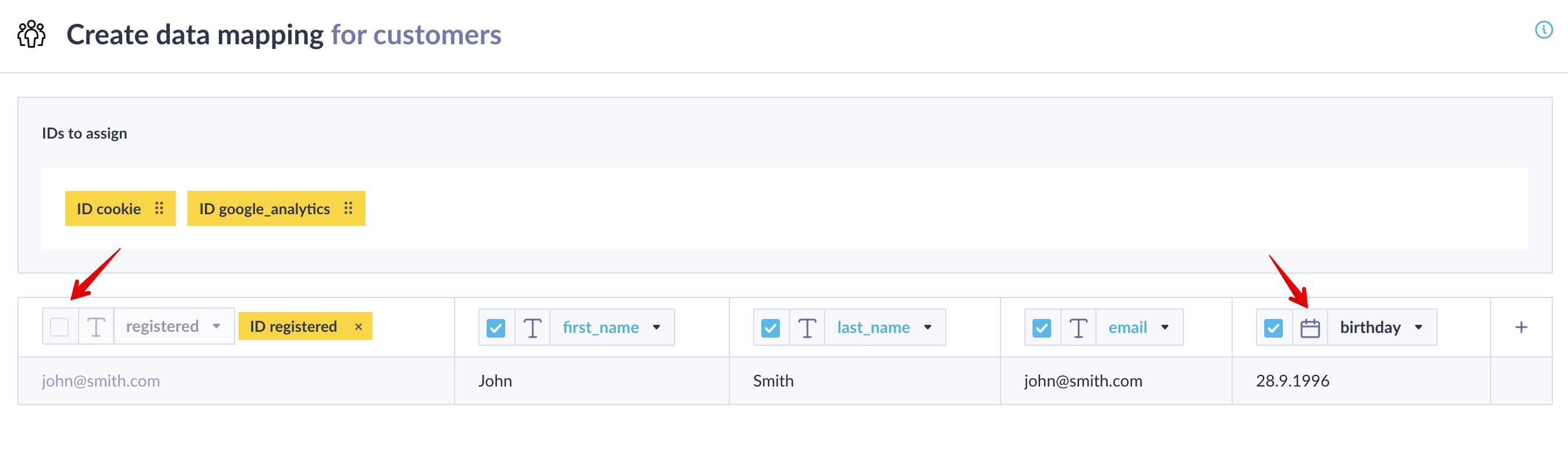

During every import, you need to pair at least one of your columns with one of the identifiers in the yellow boxes (located in the customer IDs section, from which they can be dragged and dropped). For example, for customers, this is the ID registered to identify each row with a customer so Bloomreach Engagement knows where to import those values. However, you do not want to import such a column as a new attribute, so you need to untick the checkbox for that column.

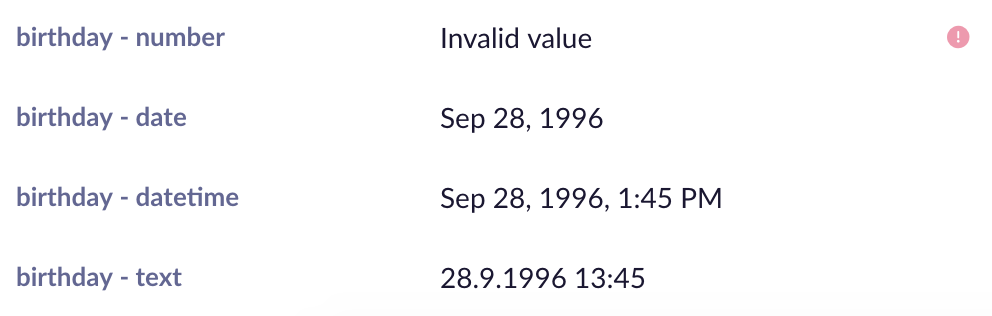

Ensure the correct data type is set for each column (text, number, date, and more). Click on a column's name and select one of the existing attributes to match it with. The data type affects how Bloomreach Engagement treats those values. When filtering data, for example, the data type will decide what type of operators will be shown by default to match that type. It also affects how the values are shown in the customer profile.

Each data type is explained in the Data Manager article. You can also change the data type for each attribute later in the Data Manager. However, be cautious with list values, as these are processed differently when imported under an incorrect data type.

Value "28.9.1996 13:45" imported under different data types and shown in a customer profile.

You can also add a new column with a static value in the import wizard by clicking on the + sign on the very right of the preview table. We recommend always adding a column with the name source and a value describing the source of the data, such as "Import_11 June 2019". This can help later with troubleshooting or orientating your data.

Important

If you want to send emails through Bloomreach Engagement, your customers must have an attribute called email.

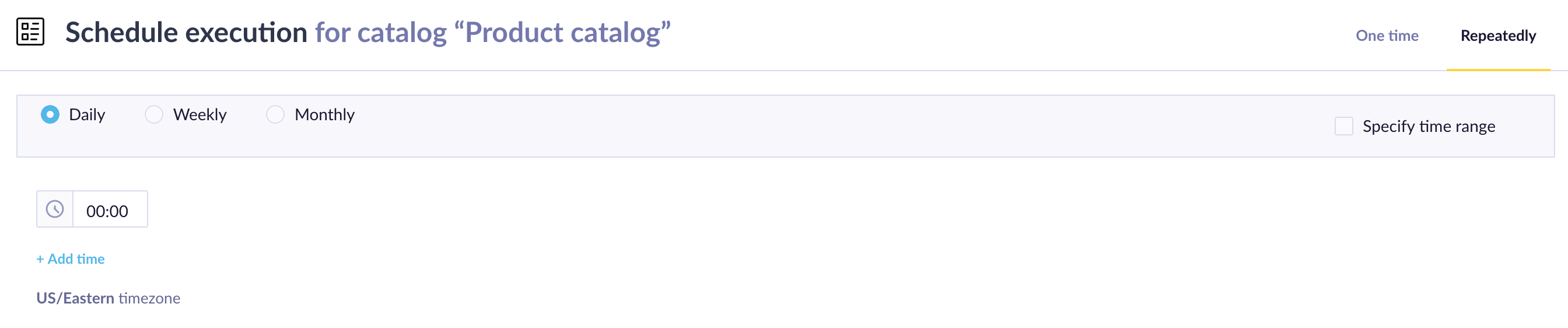

Set repeated import (optional)

If you are uploading from a dynamic source (File Storage, Database, URL, or Bloomreach Engagement), you can schedule a repeated import.

To set a repeated import, go to the fourth and last step of the import process and click on "Schedule repeated import" in the top right corner. You can set daily imports to run several times per day, weekly imports to run at one time on several days, and monthly imports to run at one time on several days each month. You can also specify the date until which you want to keep the repeated imports running.

✅ Run the import

You can check the status in the list of imports when you hit "Refresh." Large files will take some time to get imported.

You can use this CSV to try importing yourself before importing a big file. The screenshot below shows what the correct import with this data should look like.

registered;first_name;last_name;email;birthday

[email protected];John;Smith;[email protected];28.9.1996

"registered" is unchecked to use only for pairing the data with the correct customer. The "birthday" attribute is defined in the date format. As it doesn't have a blue color, it means that such an attribute doesn't exist in our data yet and will be created.

Customer ID error

The "No valid customer ID" error occurs either when a customer is missing an ID or when the ID is longer than 256 bytes. To resolve this, check the customer IDs in each row of the document from which you are importing the customers. Review this FAQ article to find out more about the most common import issues.

Reimporting customers

There are several ways to add or update customer information to keep your data accurate:

- To overwrite an existing property with new info, include this property in your import with the new data

- To overwrite an existing property with a blank value, include this property in your import with a blank value

- To leave a property’s existing value unchanged, exclude this property from your import

Importing lists

If you have multiple values that you wish to import at the same time, the proper way to import such a list is as follows: ["value1", "value2"]. Use square brackets and type the individual values inside them in double quotes, separated by commas.
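If you generate the import file with a script, you can produce this list notation with a JSON serializer and let a CSV writer take care of quoting. This is a minimal sketch: the column names `registered` and `interests`, the customer ID, and the `list_cell` helper are hypothetical.

```python
import csv
import io
import json


def list_cell(values):
    """Serialize values as ["value1", "value2"] for a list attribute."""
    return json.dumps(list(values), ensure_ascii=False)


buffer = io.StringIO(newline="")
writer = csv.writer(buffer, delimiter=";", lineterminator="\r\n")
writer.writerow(["registered", "interests"])  # hypothetical column names
writer.writerow(["customer-1", list_cell(["value1", "value2"])])
print(buffer.getvalue())
```

Because the cell itself contains double quotes, the CSV writer wraps it in quotes and doubles the inner ones. A CSV parser restores the original `["value1", "value2"]` text when reading the file.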

Importing events

You can name your events as you like, but we recommend following our naming standards for the basic events.

Data source

Choose your data source. This step works the same way as data source selection in Customer Imports. Always preview your data before proceeding to make sure it is in the expected format.

Data mapping

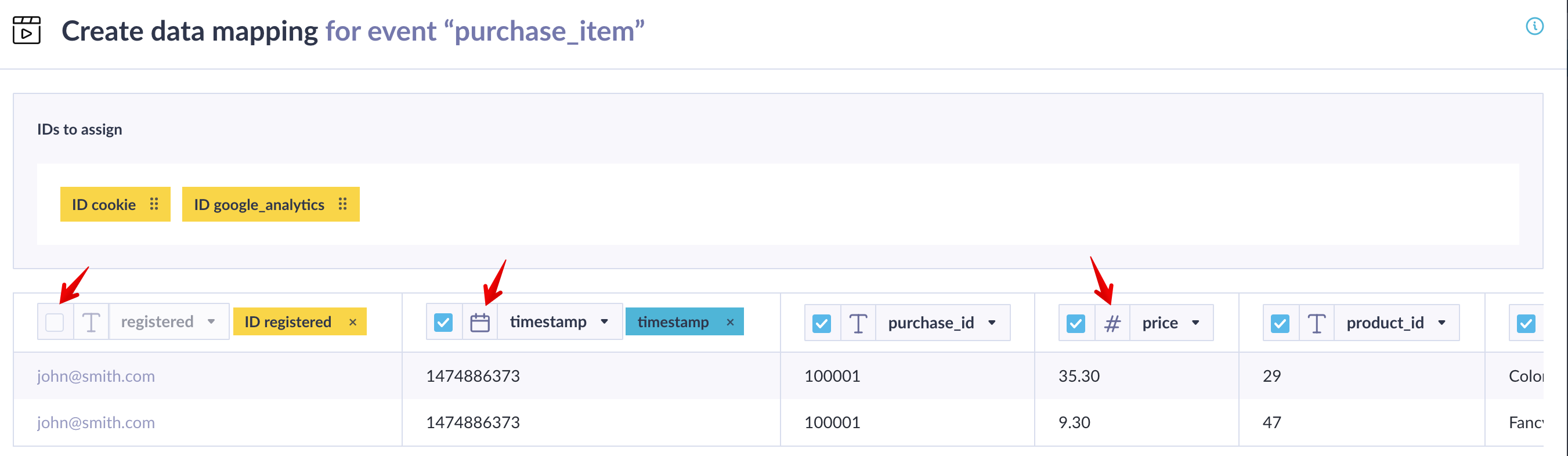

Here, you can map columns, assign customer ID tags and timestamp tags, and set the correct data types.

This crucial step is explained in detail in the customer data mapping section above.

There are 2 columns required for event imports:

- One must contain customer IDs to pair each event with the correct customer. You need to assign the appropriate yellow tag to this column.

- The second required column is the timestamp of each event, with the blue tag assigned to it. It must be in either the datetime format (for example, "2020-08-05T10:22:00") or the numeric format, that is, the number of seconds since 1970 (for example, "1596615705"). Note that the numeric format works only in seconds. If only a date is provided, Bloomreach Engagement will add midnight as the time to the event's timestamp.
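If your source system stores datetimes and you prefer to import numeric timestamps, you can convert them before the import. The sketch below is one way to do this in Python. The `to_unix_seconds` helper is hypothetical, and it assumes that values without a timezone are meant as UTC.

```python
from datetime import datetime, timezone


def to_unix_seconds(iso_value: str) -> int:
    """Convert an ISO 8601 datetime string to whole seconds since 1970."""
    parsed = datetime.fromisoformat(iso_value)
    if parsed.tzinfo is None:
        # Assumption: values without a timezone are meant as UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return int(parsed.timestamp())


print(to_unix_seconds("2020-08-05T08:21:45"))  # 1596615705
print(to_unix_seconds("2020-08-05"))           # 1596585600 (midnight)
```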

Remember that the columns with IDs should not be imported and, hence, should be left unchecked.

You can have more columns with different IDs (registered, cookie). You can map all of them, and Bloomreach Engagement will use the one available for a particular customer to pair the event with. The registered hard ID is always prioritized.

Make sure that the correct data type is set for each column (text, number, date). Click on a column's name and select one of the existing attributes to match it with.

Timezone selector

The Timezone selector, located below the data table in the import user interface, is designed to modify the timezone of DateTime values.

This feature exclusively supports ISO8601 DateTime formats. If the DateTime doesn't conform to this format, the feature does not operate.

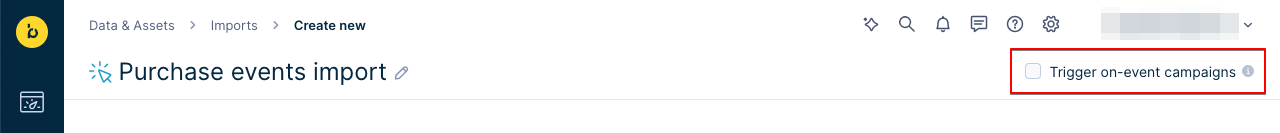

Trigger on-event campaigns (optional)

Optionally, you can check the Trigger on-event campaigns checkbox in the top right. When checked, the imported events will trigger on-event scenarios. This behavior is turned off by default.

Set repeated import (optional)

If you're uploading from a dynamic source (File Storage, Database, URL, or Bloomreach Engagement), you can schedule a repeated import.

✅ Run the import

You can check the status in the list of imports when you hit "Refresh". Big files will take some time to get imported.

You can use this CSV to try to import yourself before importing a big file. The screenshot below shows what the correct import with this data should look like.

registered;timestamp;purchase_id;total_price;total_quantity

[email protected];1474886373;100001;53.90;3

registered;timestamp;purchase_id;price;product_id;title;quantity;total_price

[email protected];1474886373;100001;35.30;29;Colorful bracelet;1;35.30

[email protected];1474886373;100001;9.30;47;Fancy necklace;2;18.60

"registered" is unchecked to use only for pairing the data with the correct customer. Notice the data types set for other columns.

Imported events cannot be selectively altered or deleted.

Importing catalogs

You can work with 2 types of catalogs in Bloomreach Engagement: general and product catalogs.

A general catalog in Bloomreach Engagement is basically a lookup table with one primary key (item_id). It can store any table with fixed columns and can be used for various advanced use cases.

A product catalog is designed to store the database of your products. It has some additional settings and IDs such as product_id, title, and size. These ensure that the attributes are properly identified by Bloomreach Engagement and can be used for recommendations.

You can select one of your existing catalogs to import additional/new data or create a new catalog by typing its name and clicking on the arrow.

Naming your catalog

The catalog name you choose should not contain spaces; otherwise, you will get an error.

Data source

Choose your data source. This step works the same way as data source selection in Customer Imports. Always preview your data before proceeding to make sure it is in the expected format.

Recommended data format for item_id

It is recommended to use item_ids that do not include /. Due to the way the endpoint is decomposed, future editing using our Catalog API will not work with item_ids containing /.

If you attempt to edit catalog items with a '/' in their item_id, you'll receive a 403 error as it disrupts URL editing. We strongly encourage avoiding the inclusion of '/' in your catalog item_id's.
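A quick pre-import check can catch such identifiers before they reach the catalog. The sketch below simply lists the item_ids that contain a slash. The `invalid_item_ids` helper and the sample values are made up for illustration.

```python
def invalid_item_ids(item_ids):
    """Return the item_ids that contain a forward slash."""
    return [item_id for item_id in item_ids if "/" in item_id]


ids = ["sku-123", "shoes/red/42", "sku_456"]
print(invalid_item_ids(ids))  # ['shoes/red/42']
```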

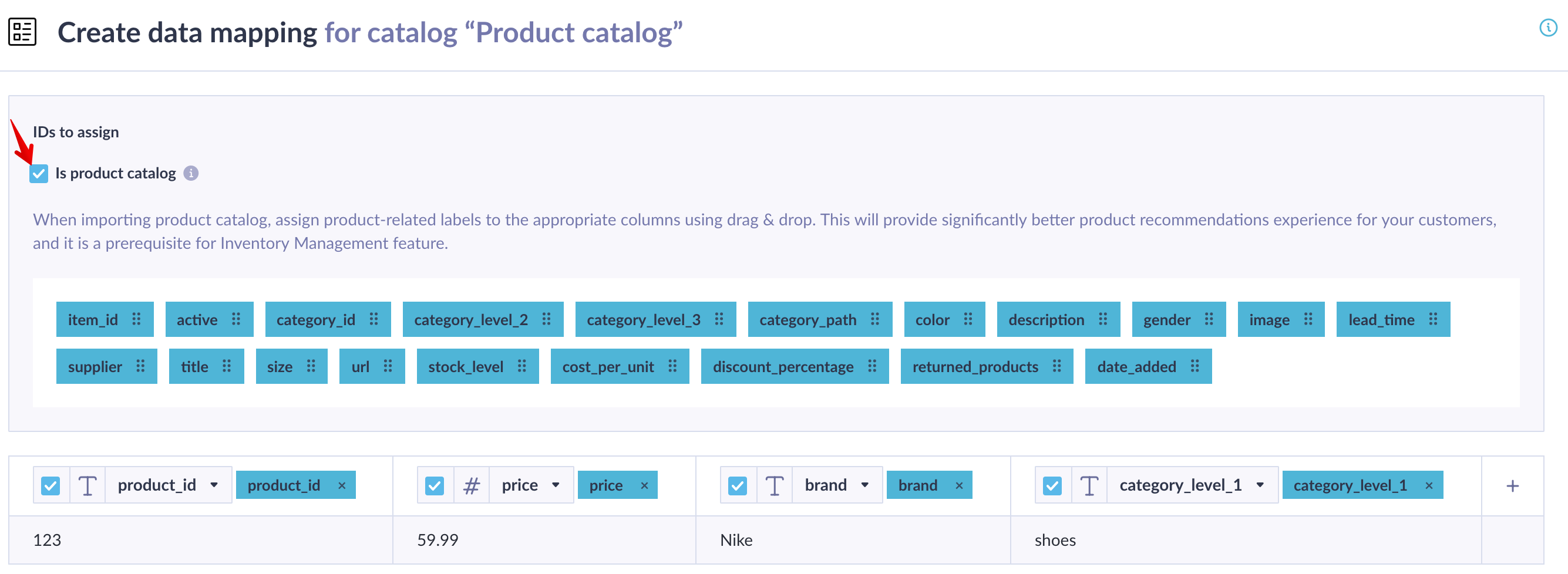

Data mapping

Firstly, check "Is product catalog" at the top if you want to import a product catalog. This will display additional tags that you need to assign to your columns (if they are present in your data).

Secondly, at least one ID tag has to be assigned. There are two IDs for products: item_id and product_id.

The difference is that one product with a single product_id can have multiple variants with different item_ids. For example, you can have a product "iPhone X Case" with a product ID "123". Next, you will also have iPhone X Case in black, white, green, and more. Each of the color variants will have its own item_id but the same product_id.

For general catalogs, item_id is used as the identifier.

See the Catalogs article for more information about each column.

Thirdly, set the correct data type for each column. Read more about navigating the customer data mapping editor in the section above in this article.

Map predefined IDs to your data structure. If you are importing a general catalog, only "item_id" is available and mandatory.

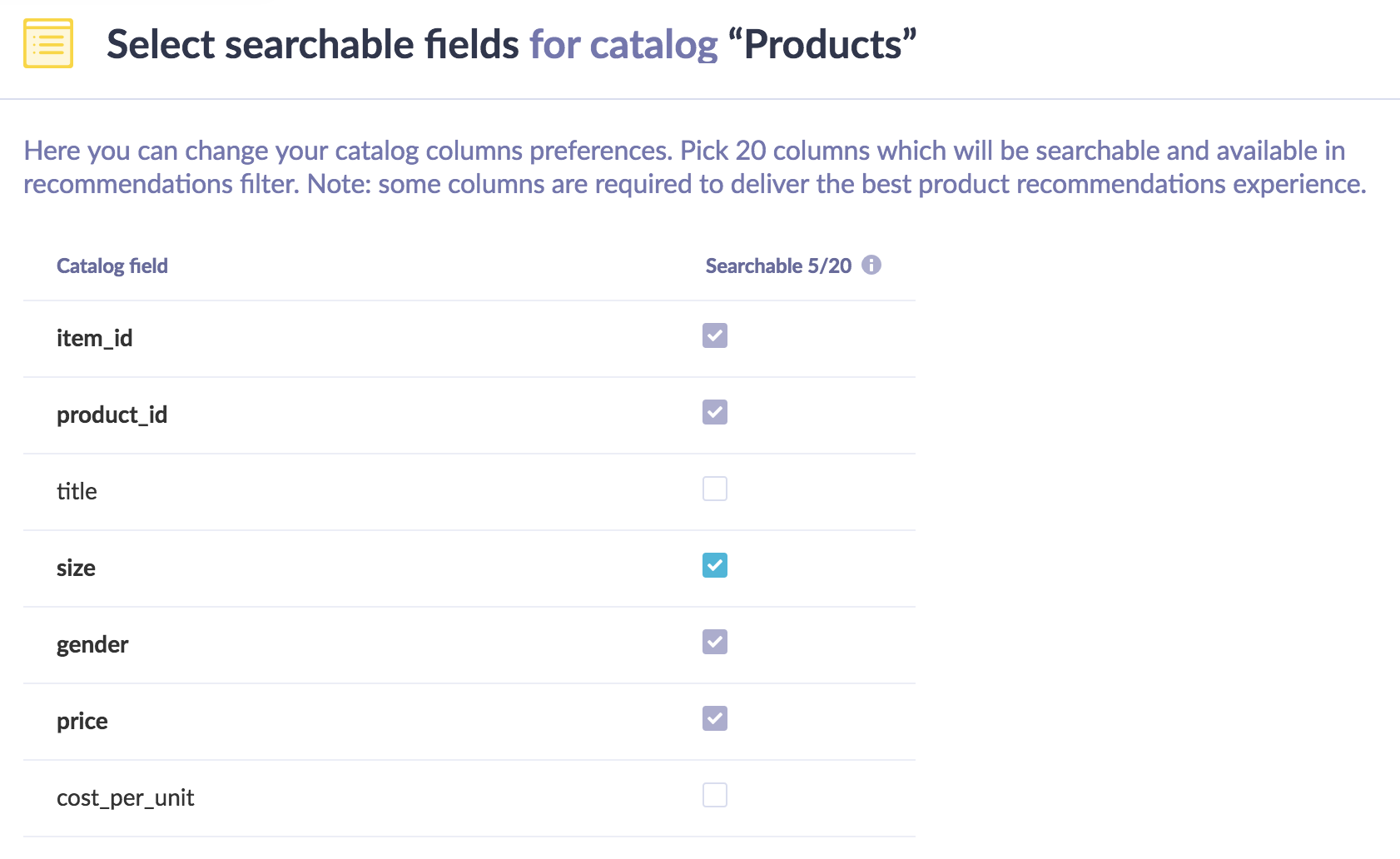

Set searchable fields

If you are importing a catalog for the first time, you have to choose which fields are "searchable." Searchable fields are available in Bloomreach Engagement search, and also recommendations are based only on these columns (this means that if you want to make product recommendations based on brand, for example, this column has to be set as a "searchable field").

- Searchable fields can only be defined during the first import. If you want to add more columns as searchable later, you will have to re-import the data to a new catalog.

- Maximum of 40 columns can be set as searchable and available for recommendations.

- List and JSON fields cannot be set as searchable

Set repeated import (optional)

If you're uploading from a dynamic source (File Storage, Database, URL, or Bloomreach Engagement), you can schedule a repeated import.

✅ Run the import

Maximum length of catalog item value

The maximum length of the catalog item value is 28672 bytes. If you exceed this length, you need to either shorten the value or split the longer property into two shorter ones.
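You can check your data against this limit before importing. The sketch below assumes that the limit applies to each property value and that the length is measured on the UTF-8 encoded text. Neither detail is confirmed in this article, so treat the check as a conservative approximation. The `oversized_fields` helper and the sample item are hypothetical.

```python
MAX_VALUE_BYTES = 28672  # limit stated in this section


def oversized_fields(item: dict) -> dict:
    """Map each field whose value exceeds the limit to its size in bytes.

    Assumption: the length is measured per value, on its UTF-8 encoding.
    """
    sizes = {
        name: len(str(value).encode("utf-8")) for name, value in item.items()
    }
    return {name: size for name, size in sizes.items() if size > MAX_VALUE_BYTES}


item = {"item_id": "sku-123", "description": "x" * 30000}
print(oversized_fields(item))  # {'description': 30000}
```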

Importing vouchers

The import process for vouchers is explained in the Vouchers article.

Additional resources

Working with CSV

To import historical data into Bloomreach Engagement, it is best to use CSV import. Each event type should be imported in a separate CSV file. Each event, that is, each row in the CSV, needs to contain a customer identifier and a timestamp in the UNIX format.

Customers may be imported to Bloomreach Engagement via CSV

In the first row of the CSV file, we need to specify the identifiers and attributes of customers. These names do not need to match the naming in Bloomreach Engagement (mapping of the columns onto customer identifiers/attributes in Bloomreach Engagement will be shown later).

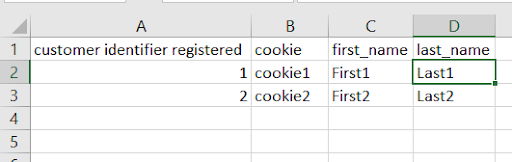

Each cell can only contain a single value - for example, a customer record often has several cookies. However, it is possible to only import 1 cookie in 1 row. To import multiple cookies, we would need to have some other identifier for the customer. The situation would look as follows.

Now customer records with a registered ID equal to 1 would have [cookie1, cookie2].
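As an illustration of this layout, the sketch below parses a two-row CSV in which both rows share the same registered ID and groups the cookies per customer. It only mimics the outcome described above and is not how Bloomreach Engagement processes the file internally. The delimiter and the values are assumptions.

```python
import csv
import io
from collections import defaultdict

# One cookie per row; the shared registered ID ties the rows together.
csv_text = "registered;cookie\r\n1;cookie1\r\n1;cookie2\r\n"

cookies_by_customer = defaultdict(list)
reader = csv.DictReader(io.StringIO(csv_text, newline=""), delimiter=";")
for row in reader:
    cookies_by_customer[row["registered"]].append(row["cookie"])

print(dict(cookies_by_customer))  # {'1': ['cookie1', 'cookie2']}
```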

In the same way, you can also update individual properties of existing customer profiles, i.e., you can only import the relevant columns with the values you wish to update.

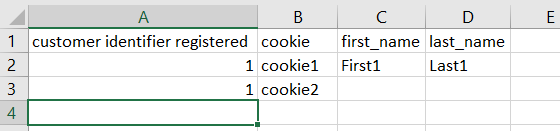

Column names in your CSV import do not need to match the names of customer attributes in Bloomreach Engagement but can be mapped. The following image illustrates the situation. To open this window in Bloomreach Engagement, go to Data & Assets > Imports > + Create new > Customers.

We can see that one of the columns in the CSV import was called ‘fn’ (firstname). We can click on the field highlighted in yellow and then select the appropriate attribute. In this case, we are assuming that ‘fn’ stands for ‘first_name’. This column will now be imported as the customer attribute first_name.

Technical limitations

Data should be imported in UTF-8 encoding. File size must not be greater than 1 GB. The file cannot contain more than 260 columns. It is recommended not to import more than approximately 1 million rows in a single file.
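A small pre-flight script can verify these limits before you upload a file. The following is a sketch only: the `check_limits` helper is hypothetical, 1 GB is assumed to mean 1024³ bytes, and the whole file is held in memory, which is fine for a quick check of smaller files but not for files close to the limit.

```python
import csv
import io

MAX_BYTES = 1024 ** 3   # 1 GB; assumption: binary gigabyte
MAX_COLUMNS = 260
MAX_ROWS = 1_000_000    # a recommendation, not a hard limit


def check_limits(data: bytes, delimiter: str = ";") -> list[str]:
    """Return human-readable problems found in the raw CSV bytes."""
    problems = []
    if len(data) > MAX_BYTES:
        problems.append("file is larger than 1 GB")
    try:
        text = data.decode("utf-8")
    except UnicodeDecodeError:
        return problems + ["file is not valid UTF-8"]
    reader = csv.reader(io.StringIO(text, newline=""), delimiter=delimiter)
    header = next(reader, [])
    if len(header) > MAX_COLUMNS:
        problems.append(f"{len(header)} columns exceed the limit of {MAX_COLUMNS}")
    row_count = sum(1 for _ in reader)
    if row_count > MAX_ROWS:
        problems.append(f"{row_count} rows exceed the recommended {MAX_ROWS}")
    return problems


print(check_limits(b"registered;first_name\r\ncustomer-1;John\r\n"))  # []
```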

CSV data source requirements:

- Provide HTTPS endpoint with SSL certificate signed by one of Trusted CAs (trusted CA list)

- Authenticates requests with HTTP authorization header (HTTP Basic Auth described in RFC 2617)

- Output CSV follows the specification of CSV format (as described in RFC 4180)

- Encoding: Allowed encodings are 'utf-8', 'utf-16', 'cp1250', 'cp1251', 'cp1252', 'cp1253', 'cp1254', 'cp1255', 'cp1256', 'cp1257', 'cp1258', 'latin1', 'iso-8859-2'

- Delimiter: Allowed delimiters are ' ', '\t', ',', '.', ';', ':', '-', '|', '~'

- Escape: double quote "

- New line: CR LF (\r\n)

- File size limit 1 GB

- Transfer speed allowing Bloomreach Engagement to download the file in less than 1 hour
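If you generate the CSV yourself, most of the formatting requirements above can be met with a standard CSV writer. The sketch below uses Python's `csv` module with a semicolon delimiter, double-quote escaping, CR LF line endings, and UTF-8 output. The column names and values are invented for illustration.

```python
import csv
import io

rows = [
    ["registered", "first_name", "note"],
    ["customer-1", "John", 'Said "hello"; left'],
]

buffer = io.StringIO(newline="")
writer = csv.writer(
    buffer,
    delimiter=";",              # one of the allowed delimiters
    quotechar='"',              # fields are escaped with double quotes
    quoting=csv.QUOTE_MINIMAL,  # quote only fields that need it
    lineterminator="\r\n",      # CR LF line endings
)
writer.writerows(rows)

payload = buffer.getvalue().encode("utf-8")  # one of the allowed encodings
print(payload)
```

The third field of the data row contains both the delimiter and a double quote, so the writer encloses it in quotes and doubles the inner quotes, as RFC 4180 describes.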

Working with XML

XML format supports all import types. The XML format is supported as a copy-paste, manual file upload, import from URL or in file storage (SFTP, Google Cloud Platform, S3) integration. XML is one of the most used data formats, usually when it comes to product catalogs for e-commerce. Adding XML format support will speed up the integration phase and make it cheaper to run and more secure at the same time.

Brief introduction to XML

XML stands for eXtensible Markup Language (similar to HTML). XML serves as a way to store or transport data in separate XML files. Using XML makes it easier for both producers and consumers to produce, receive, and archive any kind of information or data across different hardware or software.

XML documents are formed as element trees. An XML tree starts at a root element and branches from the root to child elements. XML documents must contain one root element that is the parent of all other elements. Moreover, every XML element must have a closing tag, which is marked with a slash (for example, </child>).

For more information about XML syntax and its usage visit this online guide.

<root>

<child>

<subchild>.....</subchild>

</child>

</root>

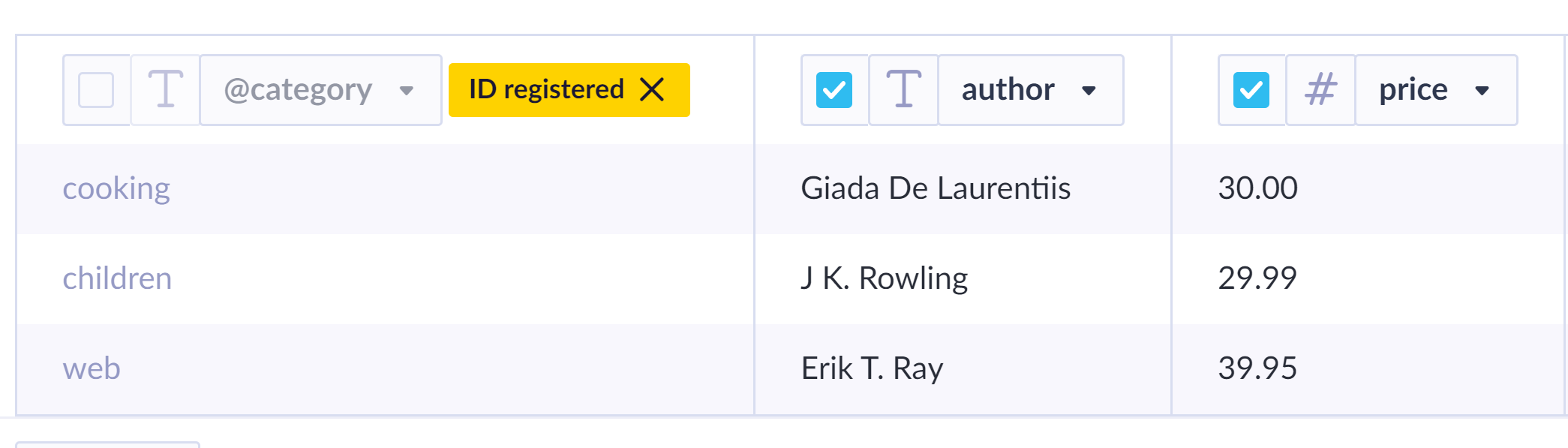

For instance, this XML example would be used to store data for a bookstore company.

<bookstore>

<book category="cooking">

<title lang="en">Everyday Italian</title>

<author>Giada De Laurentiis</author>

<year>2005</year>

<price>30.00</price>

</book>

<book category="children">

<title lang="en">Harry Potter</title>

<author>J K. Rowling</author>

<year>2005</year>

<price>29.99</price>

</book>

<book category="web">

<title lang="en">Learning XML</title>

<author>Erik T. Ray</author>

<year>2003</year>

<price>39.95</price>

</book>

</bookstore>

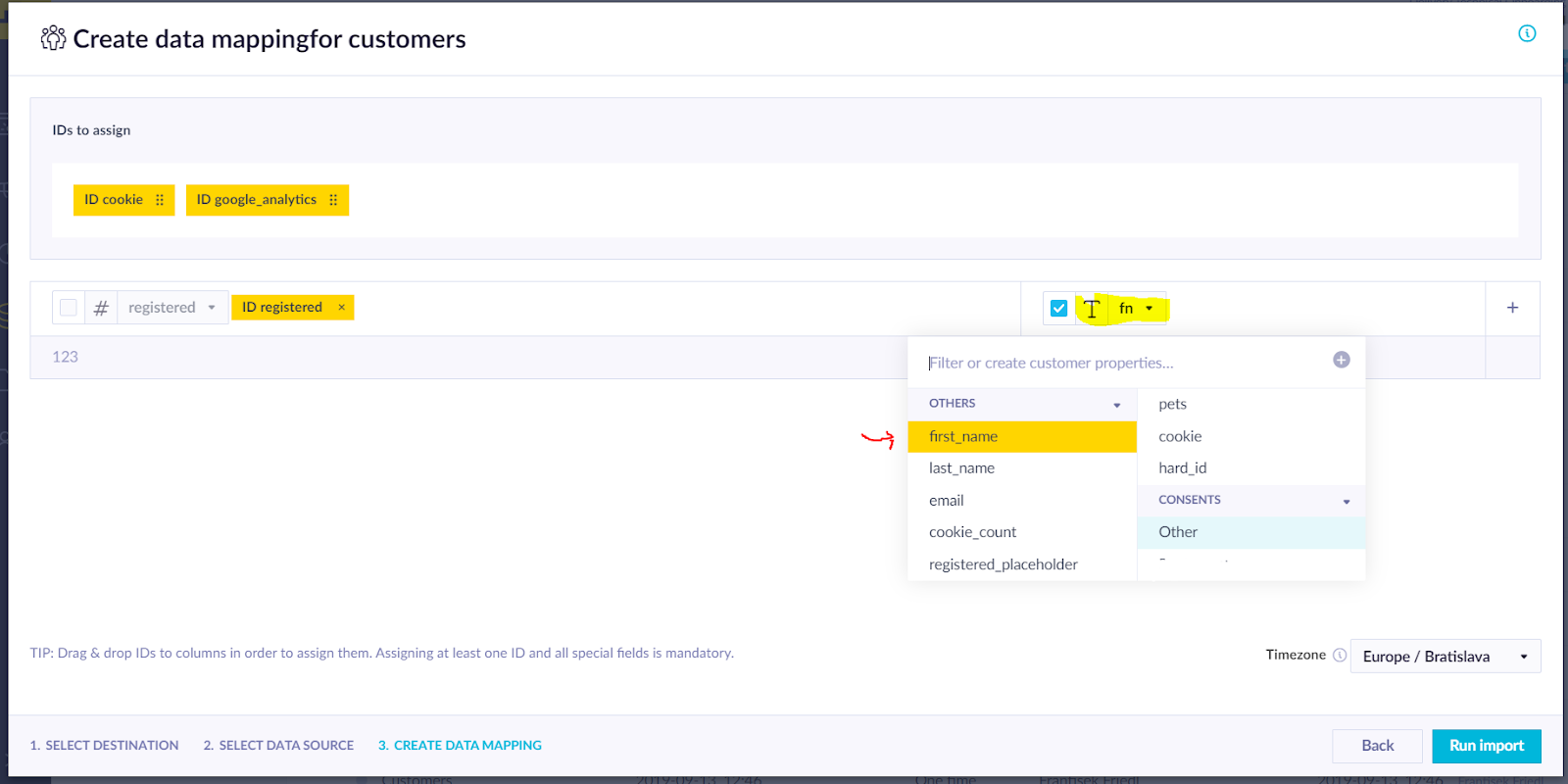

Below you can see the way Bloomreach Engagement would read this XML code.

XML imports copy the existing functionality of CSV imports. XML does not have a flat structure. However, Bloomreach Engagement supports only flat structures in import (for example, CSV, database connections), so the structure transformation (flattening) is needed.
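To give a feel for what flattening means, the sketch below turns each book element of a shortened version of the bookstore example into one flat row. This is only one possible flattening and not a description of Bloomreach Engagement's actual algorithm: the column naming (`@category`, `title@lang`) is invented, and only one level of nesting is handled.

```python
import xml.etree.ElementTree as ET

XML_TEXT = """<bookstore>
  <book category="cooking">
    <title lang="en">Everyday Italian</title>
    <author>Giada De Laurentiis</author>
    <year>2005</year>
    <price>30.00</price>
  </book>
  <book category="web">
    <title lang="en">Learning XML</title>
    <author>Erik T. Ray</author>
    <year>2003</year>
    <price>39.95</price>
  </book>
</bookstore>"""


def flatten(record: ET.Element) -> dict:
    """Turn one record element into a flat column -> value mapping."""
    # Attributes of the record itself become "@name" columns (hypothetical).
    row = {f"@{name}": value for name, value in record.attrib.items()}
    for child in record:
        row[child.tag] = (child.text or "").strip()
        # Attributes of a child become "tag@name" columns (hypothetical).
        for name, value in child.attrib.items():
            row[f"{child.tag}@{name}"] = value
    return row


rows = [flatten(book) for book in ET.fromstring(XML_TEXT)]
print(rows[0])
```

Each resulting dictionary has the same keys, so the rows can be treated like the lines of a CSV file with a shared header.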

Imports progress tracking

After you set up an import and start it, you can see a progress bar in the import process overview. The data is handled in two stages: first it is imported into Bloomreach Engagement, and then it is processed into the correct formats and mapped. The progress bar helps you make sure that the whole process has finished and that all data has passed through both stages successfully. The import is considered done only when both bars are finished, and only then can you start working with your data in Bloomreach Engagement.

Import run history

The import run listing shows jobs completed within the last 30 days.

Notifications for imports

There are two types of Notifications for Imports:

- ImportError

- ImportRowsDiscarded

The Notification Center sends notifications for Imports (including failed Imports) to everybody who has the permission portal-app.imports.update.

The roles which should include portal-app.imports.update are:

- Analyses Editor

- Campaigns Admin

- Campaigns Editor

- Project Admin

- Project Developer

- Technical Support

- Imports Admin

Date format and time format supported

The supported date formats and time formats are explained in the Data Manager article.

Deduplication of events

Every imported or tracked event is automatically checked with all events imported/tracked in the database.

Here are the criteria for detecting identical events:

- events have the same event type

- events have the same timestamp (to the last decimal place)

- events have the same event attribute values together with the same attribute value types (for example, if one attribute is a string, the other has to be a string, too)

Such new identical events will be discarded. Hence, only events with explicitly set event timestamps can be deduplicated. If possible, we recommend always importing/tracking events with event timestamps because this will allow you to safely re-import or retry tracking such events in case they are needed (for example, if the import is partially successful or there was a network problem).

Importantly, deduplication is executed only on a customer level. This means if a customer profile contains some events and you import the same events again, they are deduplicated because they are in the same customer profile. If the imported events exist in different customer profiles, they aren't deduplicated.
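The criteria above can be pictured as a comparison key built from the customer, the event type, the timestamp, and the attribute values together with their types. The sketch below is a conceptual model only, not the platform's implementation. The tuple layout of the events and the helper names are hypothetical.

```python
def event_key(customer_id, event_type, timestamp, attributes):
    """Build a key that is equal only for events considered identical."""
    typed = tuple(sorted(
        (name, type(value).__name__, repr(value))
        for name, value in attributes.items()
    ))
    return (customer_id, event_type, timestamp, typed)


def deduplicate(events):
    """Keep the first occurrence of each identical event."""
    seen, kept = set(), []
    for event in events:
        key = event_key(*event)
        if key not in seen:
            seen.add(key)
            kept.append(event)
    return kept


events = [
    ("c1", "purchase", 1474886373, {"total_price": 53.9}),
    ("c1", "purchase", 1474886373, {"total_price": 53.9}),    # identical: dropped
    ("c1", "purchase", 1474886373, {"total_price": "53.9"}),  # other type: kept
    ("c2", "purchase", 1474886373, {"total_price": 53.9}),    # other customer: kept
]
print(len(deduplicate(events)))  # 3
```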

Events that will be deduplicated still trigger scenarios. If the on-event trigger is enabled, the same event imported several times will trigger the flows several times. This is because the triggering of scenarios is handled before the deduplication process, so at the point when scenarios are triggered by a tracked or imported event, the platform does not yet know whether the event is a duplicate.

90 days deduplication expiration

Information about any processed event, regardless of its timestamp, is kept for 90 days from when it is processed. If the same event is received again within this timeframe, it is likewise not processed, but the 90-day window is extended.

In case you import the same events again (including the same timestamp), but this time with a different trigger option for a scenario, deduplication does not allow the event to actually trigger that scenario.

Important

Consent events cannot be deduplicated.

Order of events with identical timestamps

In case some events have identical event timestamps but different attributes (no deduplication) and events are assigned to the same customer profile, the Bloomreach Engagement application doesn't guarantee the order of such events.

Therefore, if the order of events is crucial (for example, for funnel analytics) for your use case, make sure that event timestamps differ by at least one decimal place. Bloomreach Engagement API should accept timestamp precision up to six decimal places.
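One way to make such timestamps distinct is to add a microsecond offset to each event that shares the same second. The sketch below builds the values as strings to avoid floating-point rounding in the sixth decimal place. The `spread_timestamps` helper is hypothetical, and whether your import source accepts fractional timestamps in the same way as the API is an assumption you should verify.

```python
def spread_timestamps(base_seconds: int, count: int) -> list[str]:
    """Return `count` timestamps that differ in the sixth decimal place."""
    if count > 1_000_000:
        raise ValueError("cannot fit more than 1,000,000 events into one second")
    return [f"{base_seconds}.{micro:06d}" for micro in range(count)]


print(spread_timestamps(1596615705, 3))
# ['1596615705.000000', '1596615705.000001', '1596615705.000002']
```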

Automatic trimming and lowercasing of IDs

This feature will trim and lowercase your IDs when they are imported or tracked to Bloomreach Engagement. You must enable it first in settings.

Duplicate identifiers in the import source

Import sources are processed from top to bottom, and in the case of customers and catalogs, each row is an update operation for a record found by a given ID.

-

In the case of catalog imports, the order of rows is respected. In other words, if you import a source that contains lines with duplicate item IDs but different columns, in general, the last occurrence of the row will execute the final catalog update, and therefore that will be the final value.

-

In the case of customer imports: the order of rows is guaranteed as long as they have exactly the same values in all ID columns. Rows using different IDs are processed in an arbitrary order, even if they belong to the same customer.
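For catalogs, the top-to-bottom behavior can be pictured as a sequence of updates keyed by item_id, where a later row overwrites the values set by an earlier one. The sketch below is a simplified mental model, not the platform's implementation. It assumes that a row only touches the columns it carries, and the sample rows are invented.

```python
def apply_catalog_rows(rows):
    """Apply rows top to bottom; each row updates the item with its item_id."""
    catalog = {}
    for row in rows:
        item = catalog.setdefault(row["item_id"], {})
        # Assumption: a row only changes the columns it contains.
        item.update({k: v for k, v in row.items() if k != "item_id"})
    return catalog


rows = [
    {"item_id": "1", "title": "Colorful bracelet", "price": "35.30"},
    {"item_id": "1", "price": "29.90"},  # later row wins for "price"
]
print(apply_catalog_rows(rows))
# {'1': {'title': 'Colorful bracelet', 'price': '29.90'}}
```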

Delays in the visibility of imported data

Our import system was designed to cope with imports of large data sets in sequential order. We optimized it for throughput instead of reactivity. For this reason, users can sometimes experience a delay between seeing a finished import in the UI and seeing the imported data.

Here is a simplified description of how our import system works:

Imports from all projects on an instance use the same queue. This means that many large imports in one project may delay imports in other projects.

When you create an import, it is first scheduled into the queue, and it will start once there is an available worker. In general, this scheduled phase should take only a few seconds, but if the instance is busy processing many other parallel imports (for example, dozens), one import must finish before the next one in the queue can start. Because of this queue mechanism, in case of lag, the size of your file has no influence on how fast it will start being imported - the delay is based on your import place in the queue.

You can follow the import and processing phases thanks to the import progress bar. Progress in the import phase indicates your data has started to be loaded into our system. Loading phase time depends on the size of the import and, in the case of external sources (for example, databases), also on connectivity. After the import loading phase finishes, it is further processed by our system, which is visible in the second part of the progress bar. After the processing phase finishes, your data is available in the system.

Timeouts when using HTTP(S) as a source

A timeout is set to prevent situations in which the server doesn't respond but the import stays in progress and therefore blocks the workers' slots.

The first part of the timeout is the connect timeout, which is set to 30 seconds. This is the time allowed for opening the socket to the server (TCP/SSL). If the server is not available within this time, we drop the import.

The second part of the timeout is the read timeout. Once the socket has been opened, this is the time allowed for receiving the first bytes of the HTTP response from the remote web server. It is set to 3,600 seconds. If that doesn't happen in the set time, the import is canceled.
