Kafka

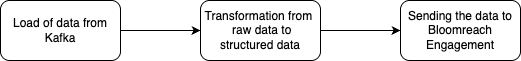

Overview of integration logic

Kafka is a distributed event streaming platform built for high-throughput, real-time data processing. Kafka streams atomic, unstructured data, while Bloomreach Engagement requires structured, event-based data. This guide walks you through connecting the two in a few steps.

We use elastic.io as the middleware between Kafka and Bloomreach Engagement. Our Client Services team will help you build the integration: your team manages the Kafka setup on your side, and we support you every step of the way.

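The logic consists of three essential parts:

1. Load of data from Kafka
2. Transformation from raw data to structured data
3. Sending the data to Bloomreach Engagement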

This setup is intended for recurring data syncs. For a large initial data load, we recommend using imports instead.
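The three parts work like stages of a pipeline, where each stage feeds the next. The following Python sketch only illustrates that flow; it is not elastic.io code, since elastic.io connects components in a flow rather than in a script. The function `run_sync` and its `decode`, `transform`, and `send` parameters are hypothetical stand-ins for the Kafka, Code, and REST API components. It also assumes one possible design choice: a stage returns `None` to drop a single message instead of stopping the whole sync.

```python
from typing import Callable, Iterable, Optional


def run_sync(
    messages: Iterable[bytes],
    decode: Callable[[bytes], Optional[dict]],
    transform: Callable[[dict], Optional[dict]],
    send: Callable[[dict], None],
) -> int:
    """Push each raw message through load -> transform -> send.

    Returns the number of events handed to `send`. A stage that returns None
    drops that one message (for example, unparseable input or data you chose
    not to forward), so one bad record does not stop the recurring sync.
    """
    sent = 0
    for message in messages:
        record = decode(message)      # part 1: raw Kafka payload -> record
        if record is None:
            continue
        event = transform(record)     # part 2: record -> structured event
        if event is None:
            continue
        send(event)                   # part 3: deliver to the API
        sent += 1
    return sent
```

In practice you may prefer to collect dropped messages for inspection rather than silently skipping them.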

To learn more about the integration, contact your Bloomreach Engagement representative, such as your CSM.

Load of data from Kafka

Flows in elastic.io consist of connected components. This integration flow starts with the Kafka component, which receives the atomic input data for processing. To learn more about the Kafka component and its limitations, see its documentation.
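A Kafka message carries a topic, an optional key, and a value in raw bytes, so the first step after receiving it is usually decoding that value. The Python sketch below assumes your topic carries UTF-8 JSON payloads; that format, the `RawMessage` class, and `decode_value` are hypothetical and only illustrate the idea. If your topic uses another serialization format, the decoding step differs.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class RawMessage:
    """Hypothetical shape of one message received from a Kafka topic."""
    topic: str
    key: Optional[bytes]
    value: bytes


def decode_value(message: RawMessage) -> Optional[dict]:
    """Decode a UTF-8 JSON payload (assumed format).

    Returns None when the bytes are not valid UTF-8, not valid JSON, or not a
    JSON object, so the caller can skip the message instead of failing.
    """
    try:
        payload = json.loads(message.value.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None
    return payload if isinstance(payload, dict) else None
```

For example, `decode_value(RawMessage("orders", b"u1", b'{"user_id": "u1"}'))` returns `{"user_id": "u1"}`.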

Transformation from raw data to structured data

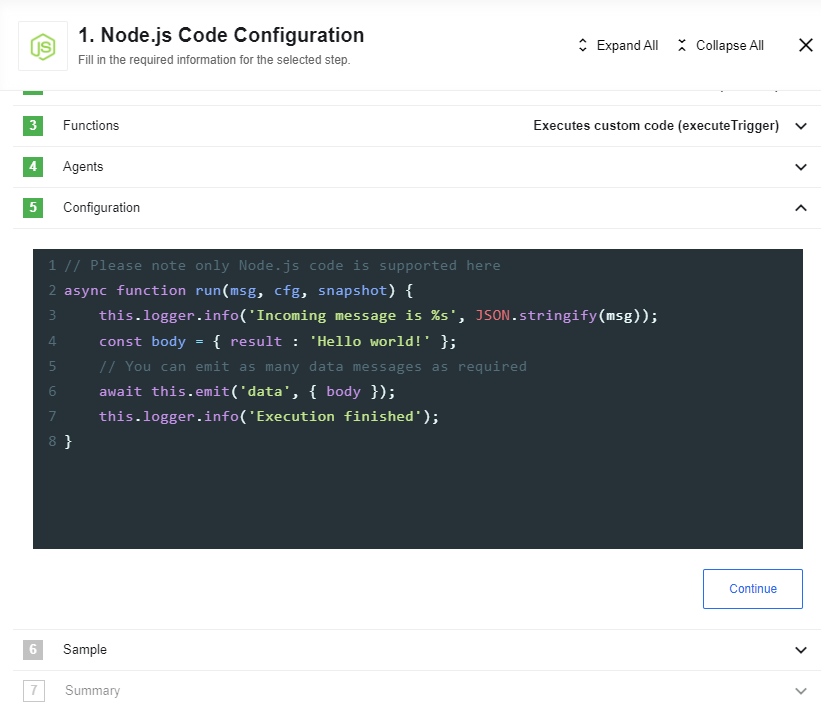

Bloomreach Engagement accepts only structured data that matches its data model. The transformation is done in a Code component in elastic.io.

The transformation takes your atomic inputs, selects the data you choose to send to Bloomreach Engagement, and restructures it to fit the data structure of your Bloomreach Engagement project.
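To make "select and restructure" concrete, here is a Python sketch of one possible mapping. It is not the Code component's actual logic, which you design together with Client Services. The source fields `user_id`, `action`, and `ts_ms`, the `SELECTED_PROPERTIES` list, and the `registered` customer ID are hypothetical. The output uses a `customer_ids` / `event_type` / `timestamp` / `properties` shape, on the assumption that it matches the Engagement tracking API's event format and that timestamps are expected in Unix seconds; verify both against the API reference.

```python
from typing import Iterable, Optional

# Hypothetical selection: the payload fields you choose to send as event properties.
SELECTED_PROPERTIES = ("product_id", "price", "currency")


def to_engagement_event(
    record: dict, selected: Iterable[str] = SELECTED_PROPERTIES
) -> Optional[dict]:
    """Turn one decoded record into a structured event.

    Returns None if a required (hypothetical) source field is missing.
    Fields not listed in `selected` are left out of the event.
    """
    user_id = record.get("user_id")  # hypothetical customer identifier
    action = record.get("action")    # hypothetical event name
    ts_ms = record.get("ts_ms")      # hypothetical timestamp in milliseconds
    if user_id is None or action is None or ts_ms is None:
        return None
    return {
        "customer_ids": {"registered": str(user_id)},
        "event_type": str(action),
        "timestamp": ts_ms / 1000,   # assumed: the API expects Unix seconds
        "properties": {k: record[k] for k in selected if k in record},
    }
```

Keeping the property list explicit means new fields appearing in the Kafka payload are not forwarded until you decide to send them.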

Bloomreach Client Services works with you on the implementation of this step.

Sending the data to Bloomreach Engagement

After the transformation, the data is sent to the Bloomreach Engagement API. This is done with the REST API component in elastic.io.
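For illustration, the Python sketch below prepares the kind of HTTP request the REST API component sends for one event, without actually sending it. Assumptions to verify in the Bloomreach Engagement API reference: the `/track/v2/projects/{project_token}/customers/events` path and HTTP Basic authentication with an API key ID and secret. The function name `build_event_request` is hypothetical, and the base URL depends on your instance.

```python
import base64
import json
import urllib.request


def build_event_request(
    base_url: str, project_token: str, key_id: str, secret: str, event: dict
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request carrying one structured event.

    The endpoint path and Basic authentication are assumptions based on the
    Engagement tracking API; confirm them in the API reference.
    """
    url = f"{base_url.rstrip('/')}/track/v2/projects/{project_token}/customers/events"
    credentials = base64.b64encode(f"{key_id}:{secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would send it. In the integration itself, the REST API component handles this step.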

This step activates your data in Bloomreach Engagement and makes it available for personalized campaigns and insightful analytics.


Limitations

To find limitations for each part of the integration, see:

For more information, contact your Bloomreach representative, such as your CSM.
