Databricks

The Databricks native integration connects your Databricks data lakehouse directly to Bloomreach Engagement, enabling automated data imports without manual file transfers or third-party middleware.

This integration supports importing customers, events, and catalogs with automatic synchronization as frequently as every 15 minutes.

Why use the Databricks integration

The integration provides several key advantages for enterprise data management:

Automated data imports: Set up scheduled, near real-time data transfers from Databricks to Bloomreach Engagement. Your campaigns and analytics always use up-to-date information without manual intervention.

Reduced complexity: Eliminate time-consuming and error-prone SFTP file transfers or custom connectors. The native integration handles data movement automatically.

Lower integration costs: Avoid extra expenses for middleware or manual processes. Reduce setup time and ongoing maintenance requirements.

Better support for AI features: Ensure Bloomreach's AI-powered tools, such as Loomi AI, have access to the latest customer and product data for more accurate insights and automation.

Enterprise-ready: The integration supports organizations already using Databricks or migrating to it, accommodating advanced analytics and marketing use cases.

How the integration works

The integration connects natively to Databricks and requires a one-time connection setup. Once configured, it can import from tables, views, and user-defined query results.

When importing from tables with Change Data Feed enabled, the system automatically imports subsequent changes from Databricks as often as every 15 minutes to keep your Engagement data current.

Prerequisites

Access requirements

You need a Databricks account with account admin privileges to set up the integration. The integration requires a Databricks user to have access to the relevant account and database.

Data format requirements

Bloomreach Engagement has flexible data format requirements. During import setup, you map the source data format to the relevant data structure.

The following columns must be present in source tables or views:

| Data category | Required columns | Optional columns |
|---|---|---|
| Customers | ID to be mapped to the customer ID in Engagement (typically registered) | Timestamp to be mapped to updated_timestamp |
| Events | ID to be mapped to the customer ID in Engagement (typically registered); Timestamp | |
| Catalog | ID to be mapped to the catalog item ID in Engagement (typically item_id) | |
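Before creating an import, it can help to confirm that the columns you plan to map actually exist in the source table or view. The following is a minimal Python sketch of such a pre-flight check. It is not part of Bloomreach or Databricks. The role names (customer_id, timestamp, item_id) are hypothetical labels for the required columns in the table above, and the mapping to your own column names is an assumption you supply.

```python
# Hypothetical pre-flight check (not part of Bloomreach or Databricks): verifies
# that the columns you plan to map exist in the source table or view.
# Role names are illustrative labels for the required columns listed above.

REQUIRED_ROLES = {
    "customers": ["customer_id"],
    "events": ["customer_id", "timestamp"],
    "catalog": ["item_id"],
}


def missing_columns(category, mapping, source_columns):
    """Return required roles that are unmapped or whose mapped column is absent.

    category: "customers", "events", or "catalog"
    mapping: role -> source column name, for example {"customer_id": "user_id"}
    source_columns: column names of the Databricks table or view
    """
    available = set(source_columns)
    return [
        role
        for role in REQUIRED_ROLES[category]
        if mapping.get(role) not in available
    ]


# An events source without a mapped timestamp column fails the check
print(missing_columns("events", {"customer_id": "user_id"}, ["user_id", "event_time"]))
# ['timestamp']
```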

Supported attribute data types:

- Text

- Long text

- Number

- Boolean

- Date

- Datetime

- List

- URL

- JSON

Delta update requirements

The integration supports delta updates from tables, importing only the changes made to the source data since the previous import. Delta updates from views aren't supported.

This feature requires the delta.enableChangeDataFeed table property to be set to true on the source table in Databricks.

Enable the Change Data Feed using the following command:

```sql
-- Replace myDeltaTable with the name of your source table
ALTER TABLE myDeltaTable SET TBLPROPERTIES (delta.enableChangeDataFeed = true);
```

For more details, see the Change Data Feed documentation by Databricks.
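To make the delta behavior more concrete, here is a minimal Python sketch of a simplified model in which a sync reads Change Data Feed rows and remembers the last commit version it processed. It is an assumption for illustration, not Bloomreach's implementation. The metadata columns _change_type, _commit_version, and _commit_timestamp are the ones Databricks adds to Change Data Feed output (you can inspect them with the table_changes SQL function); the function name rows_to_sync and the version bookkeeping are hypothetical.

```python
# Simplified model (an assumption, not Bloomreach's implementation) of a delta sync
# that reads Databricks Change Data Feed (CDF) rows for a source table.
# CDF adds _change_type, _commit_version and _commit_timestamp to each changed row.

CDF_METADATA = {"_change_type", "_commit_version", "_commit_timestamp"}
# insert = new row, update_postimage = row after an update.
# update_preimage (row before an update) and delete are skipped:
# deletes are not propagated to Engagement (see Limitations).
IMPORTABLE_CHANGES = {"insert", "update_postimage"}


def rows_to_sync(change_rows, last_synced_version):
    """Return (rows to import, new high-water commit version)."""
    selected = []
    new_version = last_synced_version
    for row in change_rows:
        version = row["_commit_version"]
        if version <= last_synced_version:
            continue  # already handled by an earlier sync
        new_version = max(new_version, version)
        if row["_change_type"] in IMPORTABLE_CHANGES:
            # Drop CDF metadata so only the table's own columns are imported
            selected.append({k: v for k, v in row.items() if k not in CDF_METADATA})
    return selected, new_version
```

A production sync would typically also collapse several changes to the same key into the latest one; this sketch keeps every qualifying row to stay short.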

Customer update timestamp

As a best practice, add an extra column to your customer table with a timestamp indicating when the customer's properties were last updated. Set this timestamp to the current time whenever customer data that is imported to Bloomreach changes.

Map this column to updated_timestamp when setting up the import. This prevents the delta update from overwriting customer property values that were tracked in Engagement since the previous import.
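The following Python sketch illustrates why the mapped timestamp matters. It assumes a simple per-property "last write wins" rule, where an imported value replaces the Engagement value only if the source row's updated_timestamp is later than the time Engagement last tracked that property. The exact rule Engagement applies may differ, and the function and parameter names are hypothetical.

```python
from datetime import datetime

# Illustrative sketch, assuming a per-property "last write wins" rule: an imported
# value replaces the Engagement value only if the source row's updated_timestamp
# is later than the time Engagement last tracked that property.
# Function and parameter names are hypothetical.


def apply_customer_import(profile, tracked_at, incoming, updated_timestamp):
    """Return the customer profile after importing one source row.

    profile: current property values in Engagement
    tracked_at: property name -> datetime it was last tracked in Engagement
    incoming: property values from the Databricks row
    updated_timestamp: value of the column mapped to updated_timestamp
    """
    result = dict(profile)
    for name, value in incoming.items():
        last_tracked = tracked_at.get(name)
        if last_tracked is None or updated_timestamp > last_tracked:
            result[name] = value
    return result


profile = {"email": "new@example.com", "tier": "gold"}
tracked_at = {"email": datetime(2024, 5, 2, 12, 0)}  # email tracked after the source change
incoming = {"email": "old@example.com", "tier": "silver"}
print(apply_customer_import(profile, tracked_at, incoming, datetime(2024, 5, 1, 9, 0)))
# {'email': 'new@example.com', 'tier': 'silver'}
```

Without the timestamp, the older source email would overwrite the newer value tracked in Engagement.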

Set up the Databricks integration

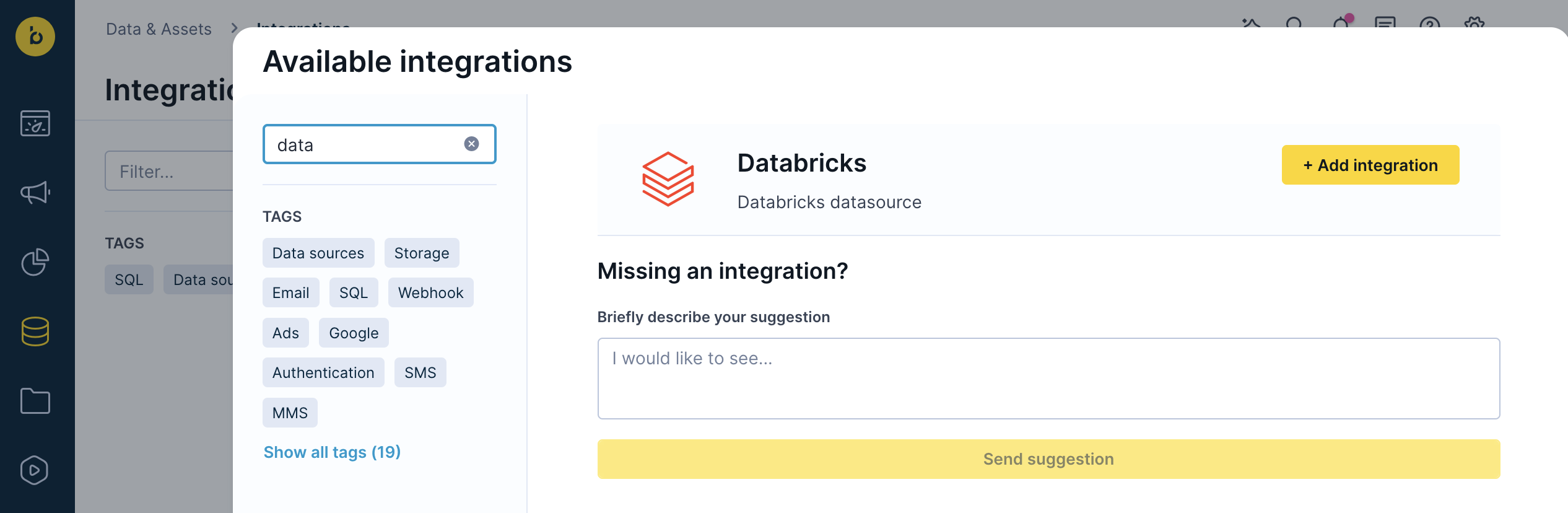

-

Go to Data & Assets > Integrations and click + Add new integration in the top right corner.

-

In the Available integrations dialog, enter "Databricks" in the search box.

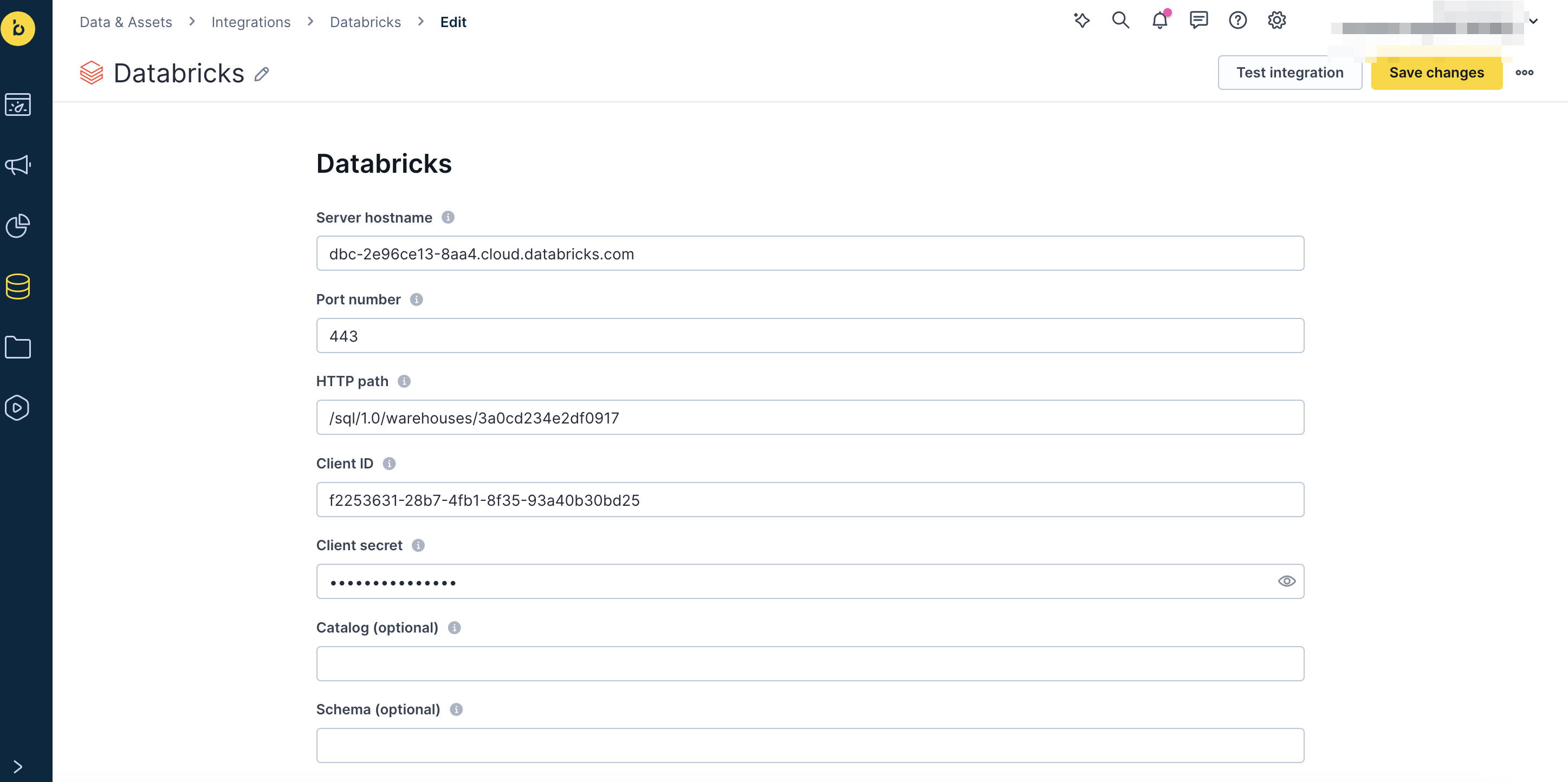

- Configure your Databricks connection by filling in the following fields (tooltips guide you with the correct format):

- Server hostname

- Port number

- HTTP path

- Client ID

- Client secret

- Catalog (optional)

- Schema (optional)

- Once you fill in all mandatory fields, click Save integration.

Important

Removing the Databricks data source integration from an Engagement project will NOT delete any data already imported from Databricks. However, this will cancel any future delta updates using the integration.

Import data from Databricks

The import process follows the same general steps for all data types, with specific configuration differences outlined below.

General import process

-

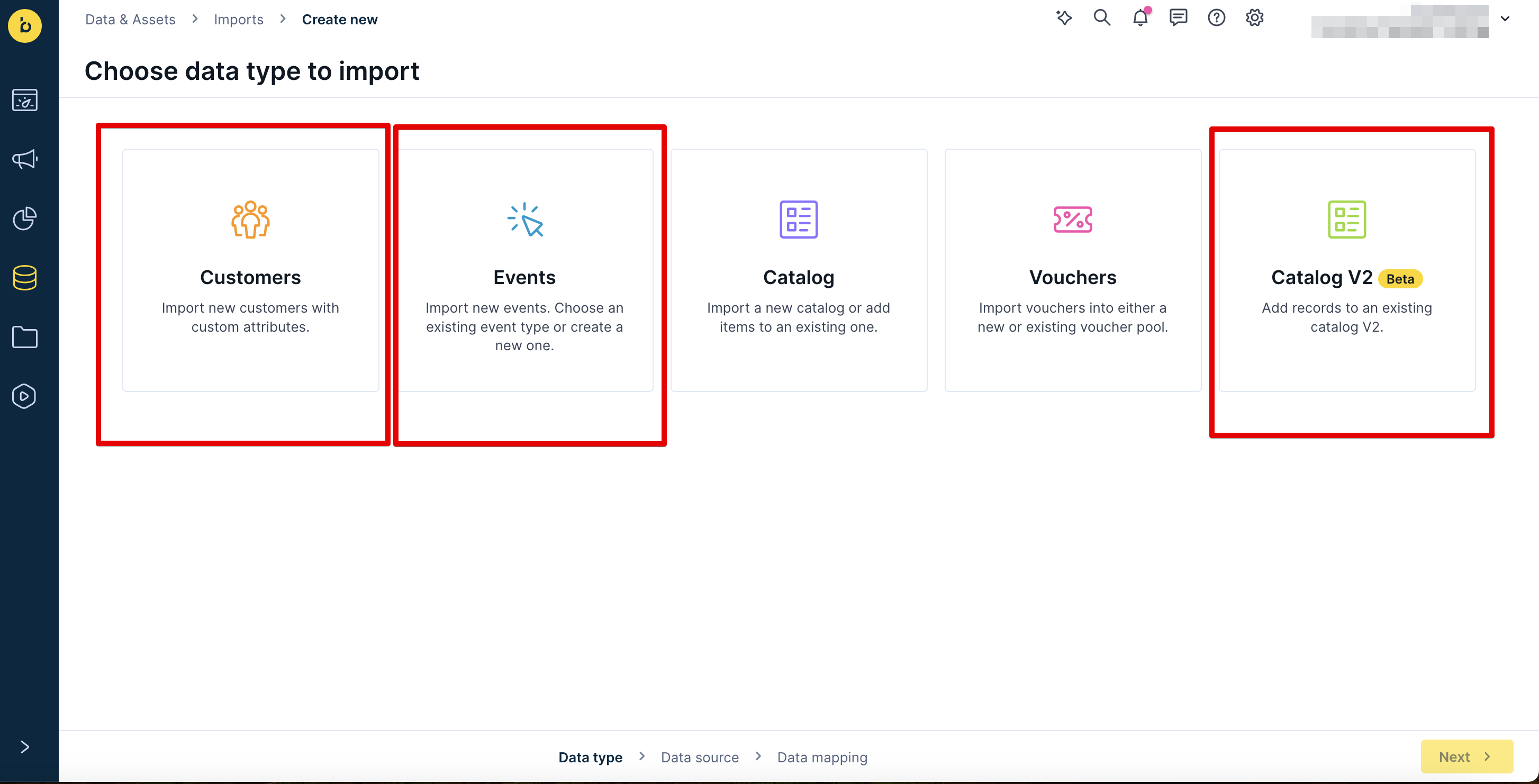

Navigate to Data & Assets > Imports and click + New import.

-

Select your data type (Customers, Events, or Catalog) and complete any type-specific selections.

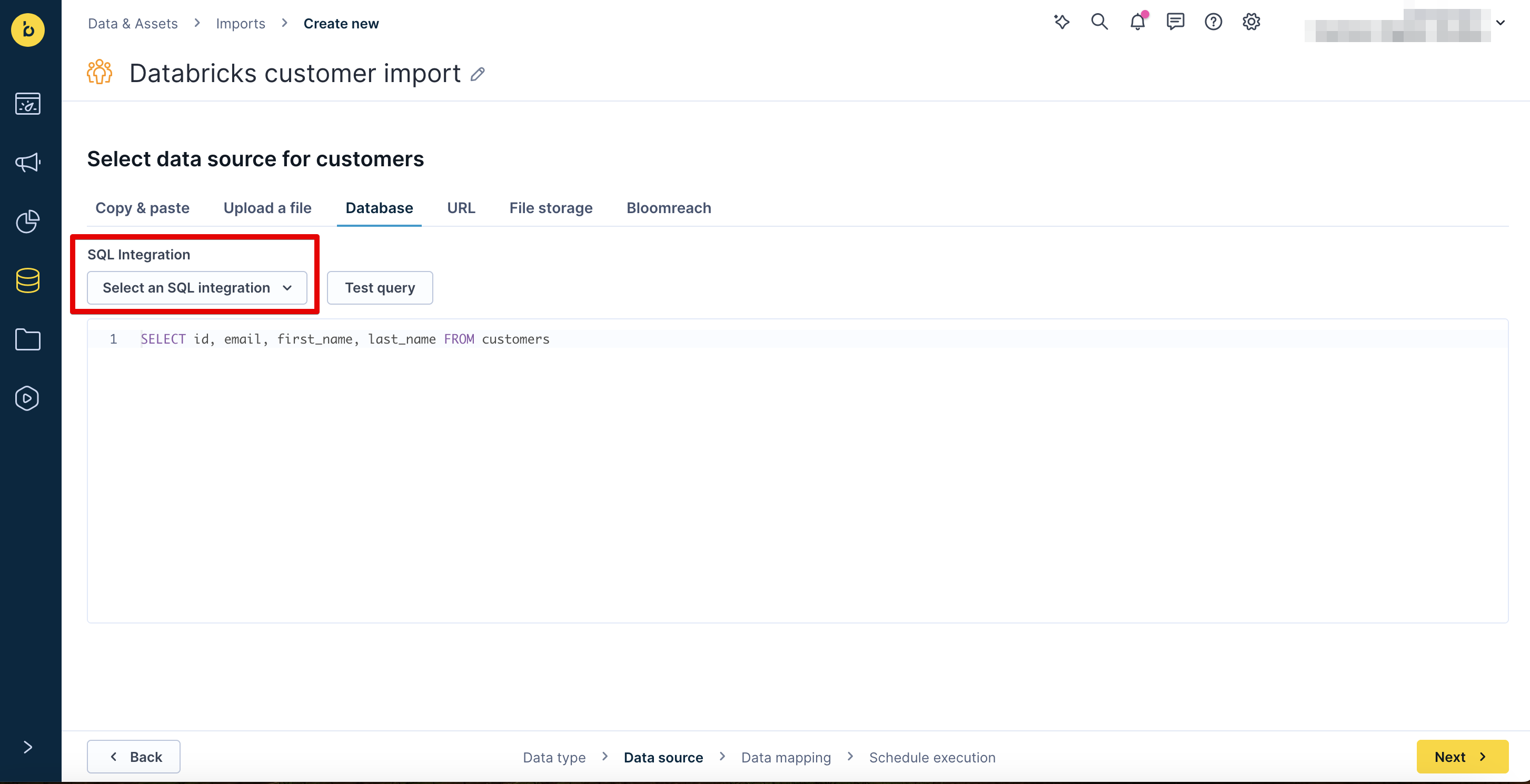

- Enter a name for the import (for example, "Databricks customers import").

- On the Database tab, in the SQL Integration dropdown, select the Databricks integration.

- Select Table, then select the table to import from in the Source Table dropdown.

- Click Preview data to verify the data source is working, then click Next.

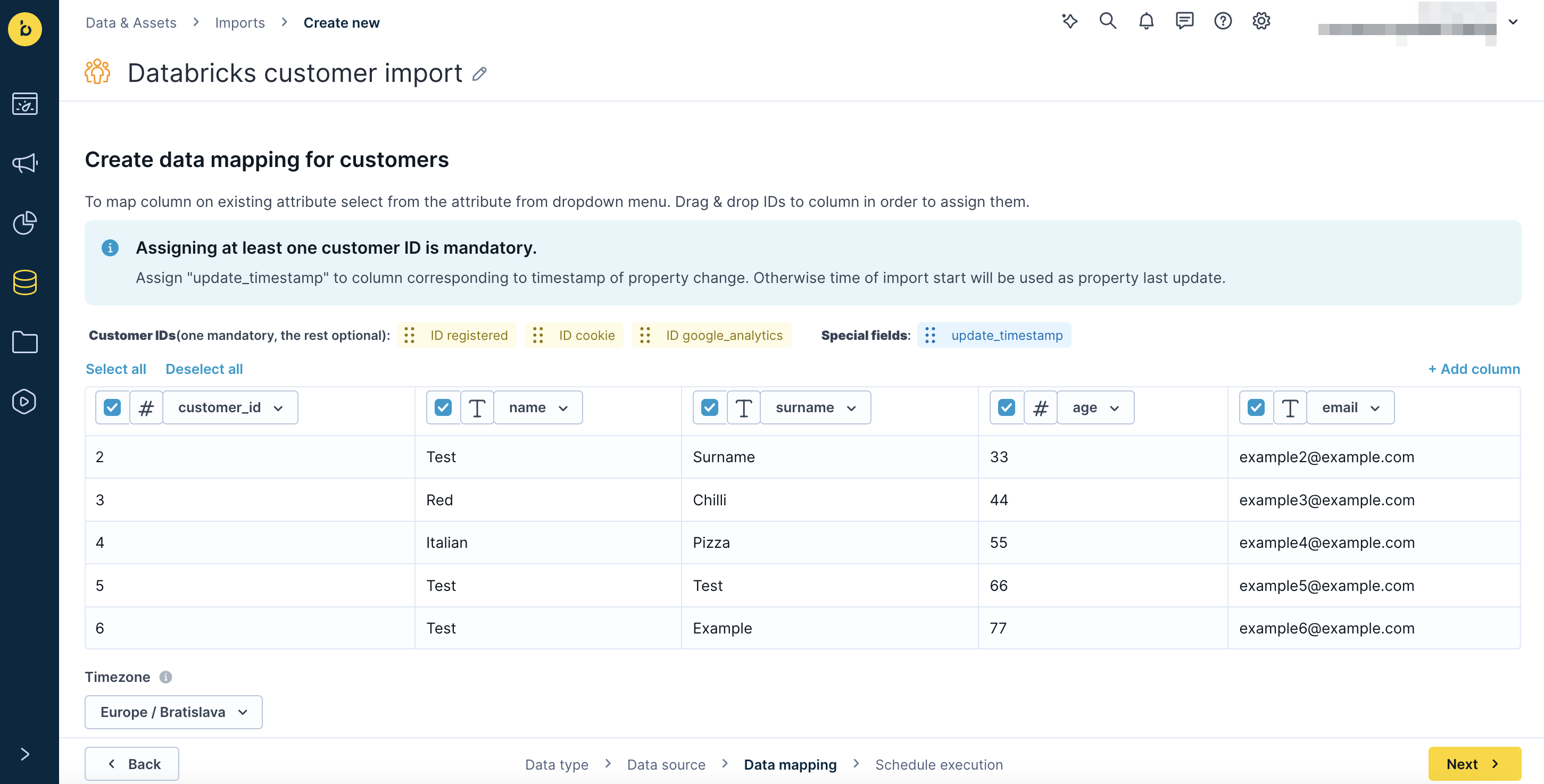

- Map your Engagement ID to the matching column in the Databricks table using drag and drop, then click Next.

- Configure your schedule execution and click Finish to start the import.

Data-specific configurations

Customers

Step 2: Select Customers.

Step 7: Map your Engagement customer ID (typically ID registered) to the matching column in the Databricks table.

Additional mapping: Optionally, map the updated_timestamp field to the matching column so that the import doesn't overwrite customer property values tracked in Engagement since the previous import.

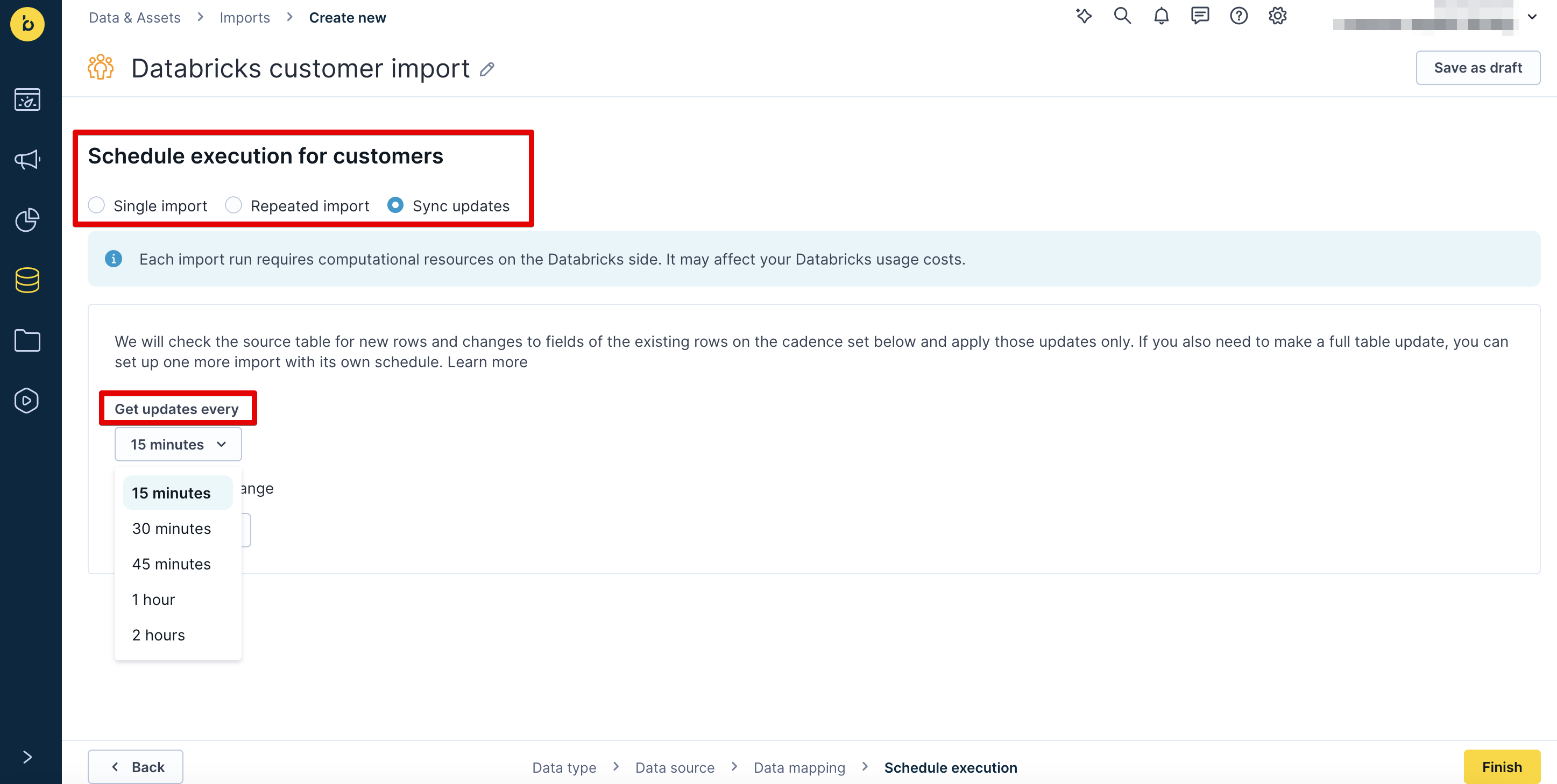

Schedule options:

- Single import: A one-off import of all records

- Repeated import: A scheduled, recurring import of all records

- Sync updates: A scheduled, recurring delta import of changes since the previous import

Schedule frequency: Every 15/30/45 minutes, 1 hour, or 2 hours, with optional time range (start and end dates).
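Counting the runs in a schedule window gives a rough sense of how often Databricks will be queried. The Python sketch below lists planned run times for one of the frequencies above. It assumes, for illustration only, that the first run starts exactly at the start of the window and that the end is inclusive; the actual scheduler may align runs differently. The function name planned_runs is hypothetical.

```python
from datetime import datetime, timedelta

# Frequencies offered for scheduled imports: 15/30/45 minutes, 1 hour, 2 hours.
ALLOWED_FREQUENCIES_MIN = (15, 30, 45, 60, 120)


def planned_runs(start, end, every_minutes):
    """List run times from start to end (inclusive) at the chosen frequency.

    Assumes, for illustration, that the first run happens exactly at start.
    """
    if every_minutes not in ALLOWED_FREQUENCIES_MIN:
        raise ValueError(f"unsupported frequency: {every_minutes} minutes")
    if end < start:
        raise ValueError("end must not be before start")
    step = timedelta(minutes=every_minutes)
    runs = []
    current = start
    while current <= end:
        runs.append(current)
        current += step
    return runs


# One day at the shortest interval (00:00 through 23:45)
day = datetime(2024, 1, 1)
print(len(planned_runs(day, day + timedelta(hours=23, minutes=45), 15)))
# 96
```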

Important

Deleting imported customer profiles in an Engagement project will NOT trigger the recreation of those customer profiles during the next delta update unless the source record has changed.

Events

Step 2: Select Events and select or enter the event type to import (for example, view), then click Next.

Step 7: Map your Engagement customer ID (typically ID registered) to the matching column in the Databricks table.

Schedule options: Same as customers (Single import, Repeated import, Sync updates).

Schedule frequency: Same as customers.

Recommendation: Use Sync updates to keep both platforms synchronized. Because this approach generates computation costs on your side, use it only for the most crucial data. Use Single import for fixed data that doesn't change and doesn't need regular imports.

Important

- You can import one event type per import (for example, view). To import multiple event types, set up a separate import for each type.
- Events in Bloomreach Engagement are immutable. Delta updates import new events but don't update previously imported events.
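The immutability rule can be illustrated with a small Python model: a delta update adds events it hasn't imported before but never rewrites an event that is already in Engagement. This is a hypothetical sketch of the documented behavior, not the actual import logic. In particular, event_key is an illustrative identifier for a source row and is not assumed to be an Engagement field.

```python
# Hypothetical model of the documented behavior: a delta update adds events it has
# not imported before, but never rewrites an event that is already in Engagement.
# event_key is an illustrative identifier for a source row, not an Engagement field.


def apply_event_delta(imported, changed_rows):
    """Return imported events (event_key -> event) after one delta update."""
    result = dict(imported)
    for row in changed_rows:
        key = row["event_key"]
        if key in result:
            continue  # events are immutable once imported
        result[key] = row
    return result


imported = {"e1": {"event_key": "e1", "url": "/a"}}
rows = [{"event_key": "e1", "url": "/changed"}, {"event_key": "e2", "url": "/b"}]
print(apply_event_delta(imported, rows)["e1"]["url"])
# /a
```

If you correct an event in Databricks after it was imported, the correction therefore doesn't reach Engagement through a delta update.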

Catalogs

Step 2: Select Catalog v2 and select the catalog to import to, then click Next.

Note

Contact your Customer Success Manager to enable Databricks support for catalog imports in your project.

Step 4: Under Import settings, select the Type:

- Full feed: Imports all data and replaces all existing records with the imported data

- Delta feed (Replace items/rows): Replaces existing records that have matching IDs and adds new records for IDs that don't exist yet

- Delta feed (Partial): Updates only the columns present in your data source, leaving the values of other columns on existing records unchanged, and adds new records for IDs that don't exist yet
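The difference between the three import types is easiest to see side by side. The Python sketch below models the catalog as a dict of item ID to item attributes and each source row as a dict, where a key missing from a row stands for a column that is absent from the data source. It is an illustration of the descriptions above under that simplified model, not Bloomreach's implementation, and the function names are hypothetical.

```python
# Illustrative sketch of the three catalog import types, modeling the catalog as a
# dict of item_id -> item attributes and each source row as a dict. A key missing
# from a row stands for a column that is absent from the data source.
# Function names are hypothetical.


def full_feed(existing, rows, id_col="item_id"):
    """Replace the whole catalog with the imported rows (existing is discarded)."""
    return {row[id_col]: dict(row) for row in rows}


def delta_replace(existing, rows, id_col="item_id"):
    """Replace items with matching IDs as a whole; add items with new IDs."""
    result = {item_id: dict(item) for item_id, item in existing.items()}
    for row in rows:
        result[row[id_col]] = dict(row)
    return result


def delta_partial(existing, rows, id_col="item_id"):
    """Overwrite only the columns present in each row; add items with new IDs."""
    result = {item_id: dict(item) for item_id, item in existing.items()}
    for row in rows:
        result.setdefault(row[id_col], {}).update(row)
    return result


existing = {"a": {"item_id": "a", "title": "Old", "price": 10},
            "b": {"item_id": "b", "title": "B", "price": 5}}
rows = [{"item_id": "a", "price": 12}, {"item_id": "c", "title": "C", "price": 1}]
print(sorted(full_feed(existing, rows)))         # ['a', 'c']: item b is gone
print(delta_replace(existing, rows)["a"])        # {'item_id': 'a', 'price': 12}: title lost
print(delta_partial(existing, rows)["a"])        # {'item_id': 'a', 'title': 'Old', 'price': 12}
```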

Step 7: Map your Engagement item ID (typically item_id) to the matching column in the Databricks table.

Schedule options:

- Single import: A one-off import of all records

- Repeated import: A scheduled, recurring import of all records

- Sync updates: A scheduled, recurring delta import of changes since the previous import (only available if you selected Delta feed as the import type)

Schedule frequency: Same as customers and events.

Important

Deleting imported catalog items in an Engagement project will NOT trigger the recreation of those catalog items during the next delta update unless the source record has changed.

Export data to Databricks

Exports can be done only in scheduled mode.

1. Export your data from Bloomreach Engagement using the Exports to Google Cloud Storage (GCS) option.

2. Keep your data in a GCS-based data lake.

3. If you need to import data from files into Databricks database tables, use LOAD. For more details, see the overview article by Databricks.

4. Trigger automatic loading of files from Engagement by calling the public REST API endpoints. For more details, or if you want to bulk load data from Google Cloud Storage, read this article by Databricks.

Delete data imported from Databricks

Using our API, you can anonymize customers individually or in bulk. To delete customers, mark them with an attribute you can filter on, and then delete them manually in the UI.

You can delete events only in the UI, by filtering them. You can delete catalog items using our Delete catalog item API endpoint.

Example use cases

One-time imports: Importing purchase history for historical analysis and segmentation.

Regular delta imports: One-way synchronization of customer attributes to ensure marketing campaigns use the most current customer data.

Limitations

The Databricks integration's delta updates don't support "delete" database operations. Deleting a record in Databricks that was previously imported into Engagement won't cause that record to be deleted in Engagement on the next sync.
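One possible workaround is to find deletions yourself and then clean up the matching records in Engagement using the anonymization API or the manual deletion options described in the section on deleting data. The Python sketch below collects keys that were deleted in the source table, assuming you read its Change Data Feed rows (which carry the _change_type and _commit_version columns). This is a hypothetical helper, not a built-in feature of the integration.

```python
# Workaround sketch (assumption, not a built-in feature): because delta updates skip
# "delete" operations, list the keys deleted in Databricks so you can anonymize or
# delete the matching records in Engagement yourself. Reads Change Data Feed rows.


def deleted_keys(change_rows, key_col, since_version):
    """Return keys deleted after since_version that were not re-added later."""
    deleted = set()
    for row in sorted(change_rows, key=lambda r: r["_commit_version"]):
        if row["_commit_version"] <= since_version:
            continue  # change already reviewed in an earlier run
        if row["_change_type"] == "delete":
            deleted.add(row[key_col])
        elif row["_change_type"] in ("insert", "update_postimage"):
            deleted.discard(row[key_col])  # the key exists in the source again
    return deleted


rows = [
    {"customer_id": "c1", "_change_type": "delete", "_commit_version": 4},
    {"customer_id": "c2", "_change_type": "delete", "_commit_version": 5},
    {"customer_id": "c2", "_change_type": "insert", "_commit_version": 6},
    {"customer_id": "c3", "_change_type": "delete", "_commit_version": 2},
]
print(deleted_keys(rows, "customer_id", 3))
# {'c1'}
```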

Support resources

For additional guidance on importing data to Engagement, refer to the following documentation:
