A/B Testing FAQ

Why can customers belong to both the Variant and Control groups in an A/B test? Why is there a "mixed" group in my customer A/B test segments?

When a new customer is first shown a campaign that is being A/B tested, they are assigned to either the Variant or the Control group. If they come back, they are always assigned to the same group.

However, the customer might come back on a different device or in an incognito window. In that case, they are treated as a new customer with a new cookie and can therefore be assigned to a different group.
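The sticky assignment and the new-cookie case can be sketched as follows. This is a minimal illustration, assuming assignments are keyed by cookie ID and kept in memory. `AbGroupStore`, `variant_share`, and `group_for` are hypothetical names, not part of the Bloomreach Engagement API, and the sketch does not claim to reproduce how the product stores assignments internally.

```python
import random

class AbGroupStore:
    """Hypothetical in-memory store of A/B group assignments keyed by cookie ID."""

    def __init__(self, variant_share=0.5, seed=None):
        self.variant_share = variant_share  # fraction of new cookies sent to Variant
        self._rng = random.Random(seed)
        self._groups = {}  # cookie ID -> "Variant" or "Control"

    def group_for(self, cookie_id):
        # First time this cookie is seen: draw a group at random and remember it.
        if cookie_id not in self._groups:
            draw = self._rng.random()
            self._groups[cookie_id] = "Variant" if draw < self.variant_share else "Control"
        # Returning visits with the same cookie always get the stored group.
        return self._groups[cookie_id]


store = AbGroupStore(seed=7)
first = store.group_for("cookie-laptop")
assert store.group_for("cookie-laptop") == first  # same cookie, same group
incognito = store.group_for("cookie-incognito")   # new cookie, independent draw
```

Because the incognito session carries a different cookie, its draw is independent of the first one, so the same person can end up in either group.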

Once that customer logs in (for example, during checkout), Bloomreach Engagement identifies them, and only at this point does the system know that both profiles belong to the same person. Bloomreach Engagement then automatically merges these seemingly different customers into one profile.

If the customer was assigned to different groups in those two sessions, they must be excluded from A/B test evaluation. Therefore, our evaluations include a "Mixed" segment that contains these merged customers.
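The segmentation rule can be expressed as a small function. This is a sketch, assuming that a merged profile keeps the group recorded in each of its pre-merge sessions; `evaluation_segment`, `segment_sizes`, and the profile dictionary are hypothetical and only illustrate the rule, not Bloomreach Engagement's implementation.

```python
from collections import Counter

def evaluation_segment(groups):
    """Map the groups recorded on one merged profile to an evaluation segment."""
    distinct = set(groups)
    if not distinct:
        return None            # never assigned, so not part of the test
    if len(distinct) == 1:
        return distinct.pop()  # consistently in one group
    return "Mixed"             # assigned to different groups before the merge

def segment_sizes(profiles):
    """Count profiles per segment; profiles maps profile ID -> list of groups."""
    sizes = Counter()
    for groups in profiles.values():
        segment = evaluation_segment(groups)
        if segment is not None:
            sizes[segment] += 1
    return sizes

profiles = {
    "p1": ["Variant"],
    "p2": ["Control", "Control"],  # same group on two devices
    "p3": ["Variant", "Control"],  # laptop + incognito, merged after login
}
print(segment_sizes(profiles))
```

Profiles in the "Mixed" segment are then simply left out when the Variant and Control groups are compared.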

Why did a customer get into the "mixed" group after changing the A/B test distribution from/to 100% for one variant?

Experiments and weblayers have an edge case when the distribution is set to 100% for one variant. In that case, this variant is shown to all customers regardless of the group they were previously assigned to. In addition, the attribution for customers who see a variant with 100% distribution is not saved. As a result, if the distribution is changed later (for example, to 50/50), these customers might switch groups and see another variant.
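The edge case can be sketched as a selection function with a fast path for a 100% variant that skips saving. This is a minimal illustration under stated assumptions: `variant_to_show`, the `saved` dictionary standing in for persisted attribution, and the percentage-based `distribution` dictionary are all hypothetical, not the product's actual logic.

```python
import random

def variant_to_show(saved, customer_id, distribution, rng):
    """
    saved: customer ID -> previously saved variant (hypothetical persisted attribution).
    distribution: variant name -> percentage; values sum to 100.
    """
    # Edge case: a variant at 100% is shown to everyone and nothing is saved.
    for variant, share in distribution.items():
        if share == 100:
            return variant
    # Normal case: reuse the saved variant, otherwise draw one and save it.
    if customer_id not in saved:
        names = list(distribution)
        saved[customer_id] = rng.choices(names, weights=[distribution[n] for n in names])[0]
    return saved[customer_id]


rng = random.Random(1)
saved = {"returning": "Variant A"}
print(variant_to_show(saved, "returning", {"Variant A": 0, "Variant B": 100}, rng))  # Variant B
print(variant_to_show(saved, "new", {"Variant A": 100, "Variant B": 0}, rng))        # Variant A
print("new" in saved)  # False: the 100% view left no saved attribution
later = variant_to_show(saved, "new", {"Variant A": 50, "Variant B": 50}, rng)       # can be either variant
```

The returning customer sees Variant B despite their saved Variant A, and the new customer's 100% view of Variant A leaves nothing behind, so after the switch to 50/50 they get a fresh draw.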

Why do I see fewer customers with event banner.action = "show" than customers with ab test events for the same variant?

The event banner.action = "show" is usually tracked only once the weblayer is fully displayed. If the page is reloaded before the weblayer is displayed, no banner event is tracked.

The ab test event is tracked when a customer is first assigned to a variant, regardless of what is displayed on the web page.

For this reason, you can sometimes see fewer customer profiles with at least one banner show event for a variant than customer profiles with an ab test event for the same variant. The longer the weblayer takes to display after the trigger action, the higher the chance that the user reloads the page before the banner show event is tracked.
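To see the size of this gap per variant, you can count distinct customers for each event type. The sketch below assumes a hypothetical flattened export of `(customer_id, event_type, action, variant)` tuples; the tuple layout and `customers_by_variant` are illustrative, not a Bloomreach Engagement export format.

```python
from collections import defaultdict

def customers_by_variant(events):
    """
    events: iterable of (customer_id, event_type, action, variant) tuples,
    a hypothetical flattened export. Returns variant -> (customers with an
    ab test event, customers with a banner show event).
    """
    ab_test = defaultdict(set)
    banner_show = defaultdict(set)
    for customer_id, event_type, action, variant in events:
        if event_type == "ab test":
            ab_test[variant].add(customer_id)
        elif event_type == "banner" and action == "show":
            banner_show[variant].add(customer_id)
    variants = set(ab_test) | set(banner_show)
    return {v: (len(ab_test[v]), len(banner_show[v])) for v in sorted(variants)}


events = [
    ("c1", "ab test", None, "Variant A"),
    ("c1", "banner", "show", "Variant A"),
    ("c2", "ab test", None, "Variant A"),  # reloaded before the weblayer appeared
]
print(customers_by_variant(events))  # {'Variant A': (2, 1)}
```

Sets are used so that each customer is counted once per variant, matching the "customer profiles with at least one event" wording above.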

We therefore recommend monitoring the weblayer variant's Show After setting and the total time it takes your weblayer to render.

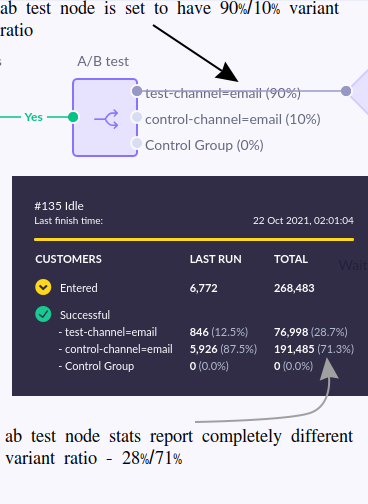

Why do I see a different scenario A/B test ratio in node stats than in node configuration?

The A/B test variant is selected only once for each customer profile. For a scenario A/B test node, this means that the variant is assigned only when the customer reaches the node for the first time.

However, customer profiles can pass through the scenario multiple times, for example when you use a repeated scenario trigger. On every later pass through the node, the customer's previously assigned A/B test variant is reused.

Campaign node stats report customers passing through the node, so a customer who passes through several times is reported on each pass. For this reason, the variant ratio in the A/B test node stats can differ from the ratio in the node's A/B test configuration. See the example picture below.

Most often, the root cause is the scenario logic, which sends customers from one variant back into the A/B test node disproportionately more often than customers from the other variants.
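A small simulation shows how a 50/50 assignment can still produce skewed node stats. This is a sketch, assuming a hypothetical scenario in which customers in variant A are routed back through the node twice more while variant B customers pass once; `simulate_ab_node` and `extra_passes` are illustrative names only.

```python
import random
from collections import Counter

def simulate_ab_node(n_customers, extra_passes, seed=0):
    """
    Every customer reaches the A/B node once and is assigned variant A or B
    50/50. Hypothetical scenario logic then sends customers of some variants
    back through the node extra_passes[variant] more times.
    Returns (unique customers per variant, node passes per variant).
    """
    rng = random.Random(seed)
    customers, passes = Counter(), Counter()
    for _ in range(n_customers):
        variant = rng.choice(["A", "B"])  # chosen once, on the first arrival
        customers[variant] += 1
        # The first pass and every repeated pass reuse the same variant.
        passes[variant] += 1 + extra_passes.get(variant, 0)
    return customers, passes


customers, passes = simulate_ab_node(1000, {"A": 2})
total = sum(passes.values())
print(f"customers A: {customers['A'] / 1000:.0%}, node passes A: {passes['A'] / total:.0%}")
```

The customers still split roughly 50/50, but because each variant A customer is counted three times, the node stats show roughly 75% for variant A.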

Why don't A/B nodes with 50/50 splits produce exact 50/50 numbers?

You cannot expect the actual numbers to match the defined split exactly. Imagine flipping a fair coin, where heads and tails are equally likely. If you toss the coin 1,000 times, it is unlikely that you will get exactly 500 heads and 500 tails. The same principle applies when a variant is selected for a customer: the selection must be random, just like a coin flip. Each preview also involves a new random selection, so the result differs every time. To get an exact 50/50 split, you can add a "Customer Limit Node" after the A/B split. However, even then, the final numbers may still differ, because not all emails will be enqueued or delivered.
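The coin-flip argument can be quantified with the binomial distribution. The sketch below, assuming each customer is assigned independently with probability 0.5, computes the chance of an exact 500/500 result for 1,000 customers and the typical deviation from it; the function names are illustrative.

```python
import math

def exact_half_probability(n):
    """P(exactly n/2 heads in n fair coin tosses); n must be even."""
    return math.comb(n, n // 2) / 2 ** n

def split_std(n, p=0.5):
    """Standard deviation of the number of customers assigned to one variant."""
    return math.sqrt(n * p * (1 - p))


print(f"{exact_half_probability(1000):.4f}")  # 0.0252
print(f"{split_std(1000):.1f}")               # 15.8
```

An exact 500/500 outcome occurs only about 2.5% of the time, and a deviation of around 16 customers from 500 in either direction is entirely normal for a fair 50/50 split.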

Why do I see different numbers in the predicted flow of a scenario every time I preview the scenario in the Test tab?

Predicted flows are estimates. They are likely to change each time the preview is rendered, because the project's customer data changes continuously.
