(old) Single Opt-In Subscription Banner with Automated Consent Management

This guide will help you understand:

- What the Use Case does

- How to set up the Use Case

- How to further customize the Use Case

- How to evaluate the dashboard

This use case is part of Engagement’s Plug&Play initiative. Plug&Play offers impactful use cases like this one, which are ready out of the box and require minimal setup on your side. Learn how you can get Plug&Play use cases or contact your Customer Success Manager for more information.

What this Use Case does and why we developed it

Problem

Marketers face several challenges when trying to acquire new newsletter subscribers. For example, customers may enter their email address in the wrong format or be confused about what they are actually signing up for.

Solution

To address these challenges, a single opt-in banner ensures that visitors to your website enter their email addresses in the right format when subscribing to a newsletter, which should lead to more newsletter subscriptions. Banners such as this have yielded up to a 69% improvement in leads captured for Bloomreach Engagement clients.

This use case

For this reason, we developed the Single Opt-In Subscription Banner with Automated Consent Management use case as a ready-to-use solution. The web layer included in it ensures that only an email address in a standard format, such as name@example.com, gets through.
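As a rough illustration of what such a format check can look like, here is a minimal JavaScript sketch. The function name `isValidEmailFormat` and the pattern are assumptions made for this example; the check shipped in the web layer's own JavaScript may be stricter or looser.

```javascript
// Hypothetical helper: the actual check in the web layer's JS may differ.
// Accepts strings shaped like local-part@domain.tld that contain no whitespace.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function isValidEmailFormat(input) {
  if (typeof input !== "string") return false;
  // Ignore accidental leading or trailing spaces typed by the visitor.
  return EMAIL_PATTERN.test(input.trim());
}

console.log(isValidEmailFormat("jane.doe@example.com")); // true
console.log(isValidEmailFormat("jane.doe@example"));     // false (no top-level domain)
console.log(isValidEmailFormat("jane doe@example.com")); // false (contains a space)
```

A pattern like this only checks the shape of the address. It does not confirm that the mailbox exists.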

The web layer also gives you an opportunity to explain what the customer is signing up for and the benefits associated with it. Furthermore, consent management is fully automated: Bloomreach Engagement updates the customer’s consent event and Newsletter consent attribute once the customer submits their email.

What is included in this use case:

- Custom animated single opt-in subscription banner

- Custom evaluation dashboard

1. Set up the Use Case

(1) Check the requirements

Prerequisites:

- Bloomreach Engagement modules:

- Weblayers

- Customer attributes:

- Consent tracked:

- Newsletter (if you use a different name, change it within the use case)

Address any discrepancies if needed.

(2) Choose and customize the web layer

The web layer included in this use case takes the form of a small dialog window and can be customized on several levels. Locate the web layer in the use case initiative and open it to customize it to your needs.

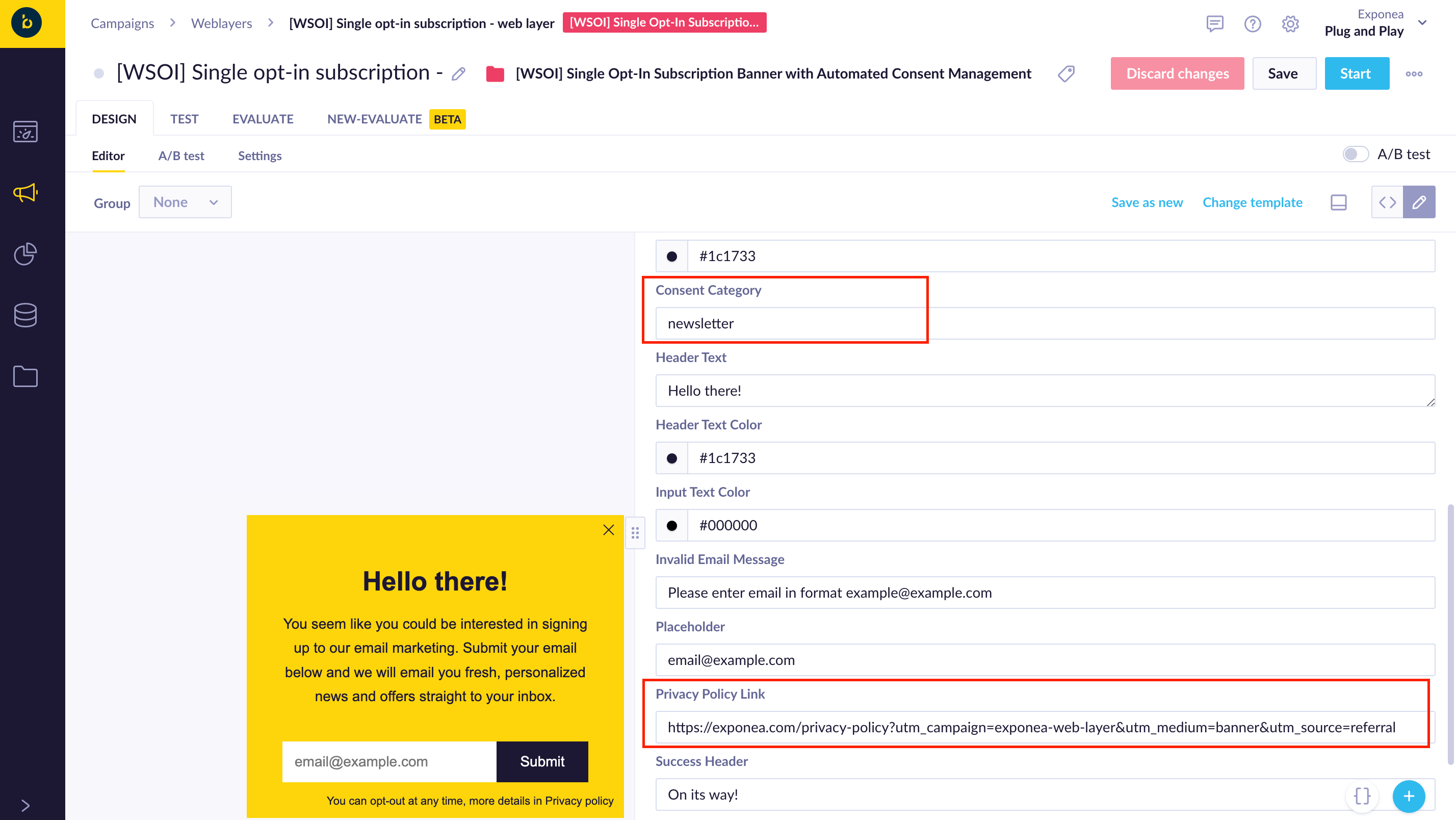

Design > Editor > Visual (parameters) editor:

By adjusting the parameters here, you can change:

- Visual aspects - the text and the color

- Consent Category - choose which consent category should be tracked for your customers when they subscribe through the banner

- Privacy Policy link - update with your link

The consent tracking is currently set to also track the event attribute ‘data_source’ with value ‘single opt-in weblayer’ (row 27 in JavaScript).
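The following JavaScript sketch shows how a consent event carrying that attribute could be assembled and sent. Only the `data_source` attribute and its value come from this guide. The function names, the other property names (`action`, `category`, `valid_until`, `timestamp`) and the shape of the `tracker` object are assumptions for illustration, so check the web layer's own JavaScript for the real call.

```javascript
// Builds the properties for a consent event. Only 'data_source' and its value
// are taken from this guide; the remaining property names are assumptions.
function buildConsentEvent(category, nowSeconds) {
  return {
    action: "accept",
    category: category,
    valid_until: "unlimited",
    timestamp: nowSeconds,
    data_source: "single opt-in weblayer",
  };
}

// 'tracker' stands in for the tracking object available on the page. It is
// assumed to expose track(eventName, properties).
function trackConsent(tracker, category) {
  const properties = buildConsentEvent(category, Math.floor(Date.now() / 1000));
  tracker.track("consent", properties);
  return properties;
}

// A stub tracker is used here so the sketch runs outside a browser.
const recorded = [];
const stubTracker = { track: (name, props) => recorded.push({ name, props }) };
trackConsent(stubTracker, "Newsletter");
console.log(recorded[0].name, recorded[0].props.data_source);
```

Keeping the payload construction in its own function makes it easy to change the consent category or the `data_source` value in one place.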

Design > Editor > Code editor:

HTML code / CSS code / JS code

- Adjust the code parameters according to your preferences.

Design > Settings

Select appropriate values

- Show on - select the web pages where you want to display the banner

- Target devices - consider if you want to display it on both mobile and desktop devices

- Consent category - select an appropriate consent category

- Audience - narrow down your audience with filters. Do not forget to exclude customers who have already given the consent.

(3) Test the Banner

It is highly recommended to test the use case before deploying it. To do so, follow the steps below. You can also find out more about Testing Weblayers in our documentation.

A quick preview is available directly in the web layer file:

- Design tab - Onsite preview to see the preview on different devices

- Test tab for a larger preview

To test the web layer in “real” conditions, you can use one of the following 2 approaches:

- Go to the Settings of the web layer and define ‘Show on’ to match a testing page (not accessible to the public). The banner will be shown to everybody on this webpage. You can then navigate to this web page to preview it.

- Go to the Settings and in ‘Audience’ select ‘Customers who...’. Filter only the testers from your customer database, for example by filtering on the cookies of the testers (you and your colleagues).

Click on the ‘Start’ button to launch the web layer with the restricted settings as described above. Once the test is finished, click ‘Stop’. We recommend testing on different browsers and devices.

(4) Run the Weblayer

Once you are satisfied with the testing results, configure the web layer to match the desired display settings in the Settings tab and click on the ‘Start’ button to launch the web layer.

(5) A/B test

An A/B test is necessary to evaluate whether the use case is performing and, most importantly, whether it is bringing extra revenue or subscriptions. We can draw conclusions from the A/B test once it reaches appropriate significance (99% or higher).
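To make the significance threshold concrete, here is a minimal JavaScript sketch of a classical one-sided two-proportion z-test. It is an illustration under stated assumptions, not necessarily the calculation Engagement performs, and the function names and the visitor and conversion figures are hypothetical.

```javascript
// Standard normal CDF using the Abramowitz-Stegun erf approximation (7.1.26).
function normalCdf(z) {
  const x = Math.abs(z) / Math.SQRT2;
  const t = 1 / (1 + 0.3275911 * x);
  const poly = t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 +
    t * (-1.453152027 + t * 1.061405429))));
  const erf = 1 - poly * Math.exp(-x * x);
  return z >= 0 ? 0.5 * (1 + erf) : 0.5 * (1 - erf);
}

// One-sided confidence that the variant converts better than the control,
// based on a pooled two-proportion z-test.
function confidenceVariantBeatsControl(control, variant) {
  const pControl = control.conversions / control.visitors;
  const pVariant = variant.conversions / variant.visitors;
  const pooled = (control.conversions + variant.conversions) /
    (control.visitors + variant.visitors);
  const standardError = Math.sqrt(
    pooled * (1 - pooled) * (1 / control.visitors + 1 / variant.visitors)
  );
  return normalCdf((pVariant - pControl) / standardError);
}

// Hypothetical figures: 2.0% vs 2.6% subscription rate.
const confidence = confidenceVariantBeatsControl(
  { visitors: 10000, conversions: 200 },
  { visitors: 10000, conversions: 260 }
);
console.log(confidence >= 0.99 ? "significant at 99%" : "keep the test running");
```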

This use case is not currently set up with an A/B test. However, we strongly recommend testing more variants (e.g. wording or page placement) to optimize the performance. You can find out more about setting up the A/B test in our guide.

To achieve the desired level of significance faster, you can opt for a 50/50 distribution. Once the significance is reached and the use case is showing positive uplift, you can:

- Minimise the Control Group to 10% and continue running the A/B test to be able to check at any given moment that the uplifts are still positive.

- Turn off the A/B test but run regular check-ups, e.g. turn the A/B test on every 3 months for as long as needed to reach significance, to make sure that the use case is still bringing positive uplifts.
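The trade-off between an even split and a small Control Group can be illustrated with a short JavaScript sketch. It assumes the usual statistical model in which, for a fixed total number of visitors, the standard error of the difference between two conversion rates is proportional to the square root of 1/n_control + 1/n_variant. The function name and the traffic figure are hypothetical.

```javascript
// Relative standard error of the difference in conversion rates for a given
// control share, with total traffic held fixed. Lower values mean the test
// reaches a given significance level with fewer visitors.
function relativeStandardError(totalVisitors, controlShare) {
  const nControl = totalVisitors * controlShare;
  const nVariant = totalVisitors * (1 - controlShare);
  return Math.sqrt(1 / nControl + 1 / nVariant);
}

const totalVisitors = 20000; // hypothetical traffic figure
const evenSplit = relativeStandardError(totalVisitors, 0.5);
const smallControl = relativeStandardError(totalVisitors, 0.1);

// Ratio of the two standard errors: how much noisier the 10% control setup is.
console.log((smallControl / evenSplit).toFixed(2)); // 1.67
```

Because the required traffic grows with the square of this ratio, a 10% Control Group needs roughly 2.8 times as many visitors as a 50/50 split to reach the same precision. This is why the even split suits the initial test and the smaller Control Group suits ongoing monitoring.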

Remember

The use case performance can change over time. We recommend checking it regularly rather than proving the value only at the beginning and then letting the use case run without further evaluation.

(6) Evaluate on a regular basis

The use case comes with a predefined evaluation dashboard. Some adjustments might be necessary to display the data correctly in your project.

Adjustments to consider:

- Consent target - add the corresponding consent category collected with this use case and, optionally, add the condition ‘data_source’ equals ‘single opt-in weblayer’ if you are collecting it

- Weblayer target - check whether the ‘banner_id’ corresponds to the scenario

- Metrics in the dashboard - adjust the display if needed, e.g. comparison to the last 14 days

Check the evaluation dashboard regularly to spot any need for improvements as soon as possible. All needed metrics definitions are included directly in the dashboard.

If you decide to modify the scenario (e.g. rename the banner), some reports and metrics in this initiative need to be adjusted to show correct data.

2. Suggestions for custom modifications

While this use case is preset for the above specification, you can modify it further to extract even more value out of it. We suggest the following modifications, but feel free to be creative and think of your own improvements:

- Try different A/B tests - run more variants at the same time and test different banner designs and website placements to maximize the conversion rate.

- Use this subscription method alongside a discount to drive up your newsletter subscription rates as well as increase the revenue.

3. Evaluate

Key metrics calculations

The attribution model used for revenue attribution takes into consideration all the purchases made within 48 hours of an email open or click. This time frame is called the attribution window.
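As a sketch of how an attribution window works, the JavaScript below sums the revenue of purchases that fall within 48 hours after an interaction. The function name, the field names (`timestamp` in milliseconds, `totalPrice`) and the sample data are hypothetical; the dashboard's own metric definitions remain the reference.

```javascript
const ATTRIBUTION_WINDOW_MS = 48 * 60 * 60 * 1000; // 48 hours, as stated above

// Sums revenue from purchases made within the window after the interaction.
// Purchases before the interaction or after the window are ignored.
function attributedRevenue(interactionTimestamp, purchases) {
  return purchases
    .filter((purchase) => {
      const elapsed = purchase.timestamp - interactionTimestamp;
      return elapsed >= 0 && elapsed <= ATTRIBUTION_WINDOW_MS;
    })
    .reduce((sum, purchase) => sum + purchase.totalPrice, 0);
}

const interaction = Date.UTC(2024, 0, 1, 12, 0, 0);
const hour = 60 * 60 * 1000;
const purchases = [
  { timestamp: interaction + 2 * hour, totalPrice: 40 },  // inside the window
  { timestamp: interaction + 47 * hour, totalPrice: 25 }, // inside the window
  { timestamp: interaction + 49 * hour, totalPrice: 90 }, // after the window
  { timestamp: interaction - 1 * hour, totalPrice: 15 },  // before the interaction
];
console.log(attributedRevenue(interaction, purchases)); // 65
```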

Go to our Evaluation Dictionary article to understand different metrics in your evaluation dashboard! The article covers Benefit/Revenue calculations, Uplift calculations and Campaign metrics relevant for this Use Case.
